Key Points

- Match your threshold model to each metric’s behavior, using static, dynamic, or overlapping types for optimal results.

- Design overlapping bands with separate entry and exit points to prevent alert flapping and ensure stable state transitions.

- Implement time-based dampening and deduplication to filter temporary spikes and consolidate duplicate alerts.

- Connect every alert severity to an automated action, ensuring critical issues trigger immediate remediation.

- Apply standardized threshold patterns by metric family to create scalable, efficient monitoring templates.

- Continuously measure alert volume and resolution times to validate improvements and guide further tuning.

Are you tired of alert storms drowning your team in noise while real issues slip through? The solution lies in strategically designing overlapping monitoring thresholds that create stable, intelligent alerts.

This practical guide will show you how to implement these thresholds with time-based dampening and automated responses to finally achieve quiet, actionable monitoring.

Strategies for tuning overlapping RMM thresholds for improved MSP monitoring

Tuning overlapping thresholds is the key to transforming chaotic alerts into a prioritized action plan for effective MSP monitoring.

📌 Use case: Perform these strategies proactively during client onboarding, after major system changes, or reactively to combat alert fatigue and reduce alert noise.

📌 Prerequisites: First, inventory all current metrics and thresholds. Then, secure a business agreement on severity definitions and SLAs. Finally, ensure access to your RMM’s alert policies and reporting tools to measure impact on volume and response times.

Strategy 1: Match threshold models to metric behavior

Choose the right threshold model for each metric to make your alerts intelligent and actionable.

Use static thresholds for hard limits

Apply static, fixed values to metrics with absolute limits, like “Free Disk Space < 10%” or “Service = Stopped.” This model is perfect for clear failure states that require immediate attention.

Use dynamic baselines for fluctuating metrics

For metrics with daily patterns like CPU or network usage, employ dynamic baselines. Your RMM learns normal behavior and alerts only on significant statistical deviations, preventing false alarms during peak times.
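As a rough illustration of what "significant statistical deviation" can mean, the sketch below flags a reading only when it sits more than a set number of standard deviations from the historical mean for the same hour. The function name `is_anomalous`, the `z_limit` default of 3.0, and the sample values are all assumptions made for this example; an actual RMM may build its baselines differently.

```python
from statistics import mean, stdev

def is_anomalous(history, value, z_limit=3.0):
    """Return True when value is more than z_limit standard deviations from the baseline mean."""
    if len(history) < 2:
        return False  # not enough samples to form a baseline
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return value != mu  # flat baseline: any change is a deviation
    return abs(value - mu) / sigma > z_limit

# Hypothetical CPU % readings for the same hour on previous days
same_hour_history = [62, 65, 60, 64, 63, 61, 66]

print(is_anomalous(same_hour_history, 67))  # slightly busier than usual
print(is_anomalous(same_hour_history, 95))  # far outside the learned range
```

Because the baseline is built per time slot, a value that is normal at peak time does not alert, while the same value at a quiet hour could.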

Apply overlapping thresholds for volatile resources

Implement overlapping Warning and Critical ranges for volatile metrics like memory usage. This introduces hysteresis, ensuring a brief spike doesn’t cause alert “flapping” and is key to sustained alert noise reduction.

By mapping the model to the metric’s behavior, you create a reliable monitoring foundation that filters noise and highlights real issues. This sets the stage for linking these precise alerts to automated actions.

Strategy 2: Design overlapping bands and reset points

Define clear entry and exit points for each alert state to eliminate bouncing, or “flapping,” notifications.

Configure entry and exit criteria

For each metric, set two values: one to trigger the alert and a stricter one to clear it. This creates a reset gap, or hysteresis, that prevents rapid state changes when a value hovers near a single point.

- Example (CPU): A Warning alert triggers at >85% for 5 minutes, but doesn’t clear until usage drops below 80% for 5 minutes.

- Example (Disk Space): A Critical alert triggers at <10% free but doesn’t clear until space recovers to above 15% free.

This hysteresis principle ensures metrics must prove sustained recovery before alerts clear, eliminating noise from temporary fluctuations in Windows 11 performance metrics.
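The entry and exit logic can be sketched as a small state machine. This minimal example uses the CPU values from the example above (enter above 85%, clear below 80%) and leaves out the 5-minute duration requirement to keep the focus on the reset gap. The class name `HysteresisAlert` and the sample readings are hypothetical.

```python
class HysteresisAlert:
    """Tracks one alert state with separate entry and exit thresholds."""

    def __init__(self, enter_above, clear_below):
        if clear_below >= enter_above:
            raise ValueError("clear_below must be lower than enter_above")
        self.enter_above = enter_above
        self.clear_below = clear_below
        self.active = False

    def update(self, value):
        """Feed one sample and return whether the alert is active afterwards."""
        if not self.active and value > self.enter_above:
            self.active = True
        elif self.active and value < self.clear_below:
            self.active = False
        return self.active

cpu_warning = HysteresisAlert(enter_above=85, clear_below=80)
samples = [82, 86, 84, 86, 83, 79, 82]  # hovers around the entry point
states = [cpu_warning.update(s) for s in samples]
print(states)
```

With a single threshold at 85%, these readings would open and close the alert repeatedly. With the reset gap, the alert opens once at 86% and stays open until the value falls to 79%.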

This strategy will help you achieve stable state transitions where each incident generates a single, reliable alert instead of multiple bouncing notifications.

Strategy 3: Implement time-based dampening and deduplication

Add time and grouping rules to filter temporary spikes and prevent alert storms.

Require a minimum duration for alerts

Configure your RMM to only trigger alerts after a breach persists for a specific time. For example, require CPU to stay above 95% for 3 minutes before creating a Critical ticket, effectively ignoring short-lived spikes common on Windows systems.
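A minimum-duration rule might look like the following sketch, which uses the 95% for 3 minutes example from above. The class name `SustainedBreach`, the timestamps in seconds, and the sample readings are assumptions for illustration only.

```python
class SustainedBreach:
    """Fires only after a value has stayed above the threshold for min_seconds."""

    def __init__(self, threshold, min_seconds):
        self.threshold = threshold
        self.min_seconds = min_seconds
        self.breach_started = None  # timestamp of the first sample in the current breach

    def update(self, timestamp, value):
        if value <= self.threshold:
            self.breach_started = None  # any dip resets the timer
            return False
        if self.breach_started is None:
            self.breach_started = timestamp
        return timestamp - self.breach_started >= self.min_seconds

cpu_critical = SustainedBreach(threshold=95, min_seconds=180)

# (seconds, CPU %) pairs: a short spike, a dip, then a sustained breach
readings = [(0, 97), (60, 98), (120, 90), (180, 96), (240, 97), (300, 99), (360, 98)]
fired = [cpu_critical.update(t, v) for t, v in readings]
print(fired)
```

The two-minute spike at the start never fires because the dip at 120 seconds resets the timer. Only the breach that lasts from 180 to 360 seconds produces an alert.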

Group and suppress duplicate alerts

Activate deduplication to merge repeated alerts for the same issue into a single incident ticket with an occurrence count. Set a cooldown period (e.g., 2 hours) during which the same alert on the same device does not create a new ticket. Grouping is equally important for correlated events, such as a network switch failure that raises alerts on multiple endpoints at once.
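One way to picture per-device deduplication with a cooldown is the sketch below. The `Deduplicator` class, its return strings, and the device and alert names are hypothetical. It also makes one design assumption that your RMM may not share: the cooldown is measured from the moment the ticket was opened, not from the most recent repeat.

```python
class Deduplicator:
    """Suppresses repeats of the same alert on the same device during a cooldown."""

    def __init__(self, cooldown_seconds):
        self.cooldown_seconds = cooldown_seconds
        self.tickets = {}  # (device, alert) -> {"opened": timestamp, "count": occurrences}

    def handle(self, timestamp, device, alert):
        key = (device, alert)
        ticket = self.tickets.get(key)
        if ticket and timestamp - ticket["opened"] < self.cooldown_seconds:
            ticket["count"] += 1  # fold the repeat into the existing ticket
            return "suppressed"
        self.tickets[key] = {"opened": timestamp, "count": 1}
        return "new_ticket"

dedupe = Deduplicator(cooldown_seconds=2 * 60 * 60)  # 2-hour cooldown

events = [
    (0, "srv01", "disk_low"),
    (600, "srv01", "disk_low"),   # repeat inside the cooldown
    (900, "srv02", "disk_low"),   # different device, separate ticket
    (7200, "srv01", "disk_low"),  # cooldown has elapsed
]
results = [dedupe.handle(t, device, alert) for t, device, alert in events]
print(results)
```

Correlating alerts across devices (the switch failure case) would need an additional grouping key, such as the upstream device, which this sketch does not attempt.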

This method separates fleeting anomalies from real problems by filtering transient noise and grouping correlated events. It’ll help you receive fewer, more actionable alerts that represent genuine, sustained issues, enabling faster root cause resolution.

Strategy 4: Map severity to action and automation

Connect every alert severity to a specific, automated response to ensure consistent and efficient resolution.

Assign tiered responses

Configure your RMM to trigger different actions based on severity. For Warning alerts, automatically create a low-priority ticket with runbook links. For Critical alerts, immediately page the on-call technician and trigger safe automated remediation scripts, such as a disk cleanup on a Windows device.

Verify automated remediation

After an automation script runs, program your RMM to perform a follow-up metric check (e.g., re-check disk space) to verify the issue is resolved before automatically clearing the alert.
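The tiered response and the follow-up check can be sketched together. Everything in this example is illustrative: `handle_alert`, the action labels, and the simulated disk are made up, and the callbacks stand in for whatever ticketing, paging, and scripting hooks your RMM exposes.

```python
def handle_alert(severity, read_metric, remediate, is_healthy):
    """Return the ordered list of actions taken for one alert."""
    if severity == "warning":
        return ["create_low_priority_ticket"]
    if severity == "critical":
        actions = ["page_on_call"]
        remediate()
        actions.append("ran_remediation")
        # Re-check the metric before clearing; escalate if the fix did not hold
        if is_healthy(read_metric()):
            actions.append("auto_clear")
        else:
            actions.append("escalate")
        return actions
    raise ValueError(f"unknown severity: {severity}")

# Simulated device: 8% free disk, and a cleanup that frees 10 percentage points
disk = {"free_pct": 8}

def cleanup():
    disk["free_pct"] += 10

result = handle_alert(
    "critical",
    read_metric=lambda: disk["free_pct"],
    remediate=cleanup,
    is_healthy=lambda free: free > 15,  # the clear point, not the trigger point
)
print(result)
```

Checking against the clear value (15% free) instead of the trigger value (10% free) keeps the verification consistent with the reset gap from Strategy 2.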

This method ensures the right response matches the urgency of the problem. It is fundamental for transforming your MSP monitoring from a passive alerting system into an active management platform.

Strategy 5: Apply patterns by metric family

Standardize your monitoring approach by applying consistent threshold patterns to groups of similar metrics.

Implement family-specific templates

Configure your RMM using these proven patterns for common metric families:

- CPU/Memory: Use dynamic baselines with overlapping bands and 3-10 minute dampening to handle normal fluctuations on Windows 11 systems.

- Disk Space: Apply static thresholds with wide reset gaps (e.g., warn at 15% free, clear at 20% free) and enable trend-based warnings for gradual capacity issues.

- Services/Processes: Set immediate Critical alerts for stopped services with short retry attempts, but only clear after sustained stable operation.

- Network Performance: Utilize baseline thresholds with time-of-day profiles and aggregate interface alerts at the device level to monitor connectivity holistically.
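The patterns above could be captured as reusable templates keyed by metric family, as in this sketch. The `ThresholdTemplate` fields, the family and metric names, and the 5-minute dampening value (picked from the 3-10 minute range) are assumptions for illustration and do not reflect any particular RMM's policy schema.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class ThresholdTemplate:
    model: str                  # "static" or "dynamic_baseline"
    warn_at: Optional[float]    # entry point, if the model uses a fixed value
    clear_at: Optional[float]   # exit point, if the model uses a fixed value
    dampening_minutes: int      # how long a breach must persist before alerting

TEMPLATES = {
    "cpu_memory": ThresholdTemplate("dynamic_baseline", None, None, 5),
    "disk_space": ThresholdTemplate("static", 15.0, 20.0, 0),  # percent free
    "services": ThresholdTemplate("static", None, None, 0),    # alert immediately
    "network": ThresholdTemplate("dynamic_baseline", None, None, 5),
}

# Hypothetical metric names mapped to their family
METRIC_FAMILY = {
    "cpu_percent": "cpu_memory",
    "memory_percent": "cpu_memory",
    "disk_free_percent": "disk_space",
    "service_state": "services",
    "interface_throughput": "network",
}

def template_for(metric):
    """Look up the shared template for a metric through its family."""
    return TEMPLATES[METRIC_FAMILY[metric]]

print(template_for("disk_free_percent"))
```

New metrics then only need a family assignment, and a tuning change to one template applies to every metric in that family.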

This approach creates scalable, sensible defaults that reduce manual configuration effort while maintaining precision. By treating metric families as unified patterns rather than individual settings, you achieve consistent MSP monitoring across all devices and ensure reliable alert noise reduction through proven templates.

Strategy 6: Measure results and iterate

Continuously validate and refine your thresholds using data to demonstrate tangible improvement.

Track key performance metrics

Compare critical data before and after your changes: total alert volume, duplicate alerts, Mean Time to Acknowledge (MTTA), and the percentage of alerts auto-resolved. This provides clear evidence of alert noise reduction and efficiency gains.
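A before-and-after comparison can be as simple as the sketch below. The record fields (`duplicate`, `ack_minutes`, `auto_resolved`) and the sample numbers are invented for the example; in practice the data would come from your RMM's alert or ticket export.

```python
from statistics import mean

def summarize(alerts):
    """Compute the four tracking metrics for a list of alert records."""
    total = len(alerts)
    if total == 0:
        return {"total": 0, "duplicates": 0, "mtta_minutes": None, "auto_resolved_pct": None}
    return {
        "total": total,
        "duplicates": sum(1 for a in alerts if a["duplicate"]),
        "mtta_minutes": mean(a["ack_minutes"] for a in alerts),
        "auto_resolved_pct": 100 * sum(1 for a in alerts if a["auto_resolved"]) / total,
    }

# Made-up sample periods of equal length
before = [
    {"duplicate": False, "ack_minutes": 30, "auto_resolved": False},
    {"duplicate": True, "ack_minutes": 20, "auto_resolved": False},
    {"duplicate": True, "ack_minutes": 40, "auto_resolved": False},
    {"duplicate": False, "ack_minutes": 30, "auto_resolved": False},
]
after = [
    {"duplicate": False, "ack_minutes": 10, "auto_resolved": True},
    {"duplicate": False, "ack_minutes": 20, "auto_resolved": False},
]

b, a = summarize(before), summarize(after)
volume_reduction_pct = 100 * (b["total"] - a["total"]) / b["total"]
print(b)
print(a)
print(volume_reduction_pct)
```

Comparing periods of equal length, and of similar workload where possible, keeps the volume figures meaningful.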

Conduct regular reviews

Schedule weekly sessions to analyze the top “noisy” metrics, adjusting threshold bands, dampening periods, or automation safeguards based on actual performance data from your Windows 11 endpoints.

Report on progress

Create a simple monthly scorecard for each client that highlights reductions in alert noise and resolution times, clearly showing the return on investment from your refined MSP monitoring strategy.

This will give you quantifiable proof that your tuning efforts are working, along with a prioritized list of targeted improvements to tackle next, creating a cycle of continuous optimization.

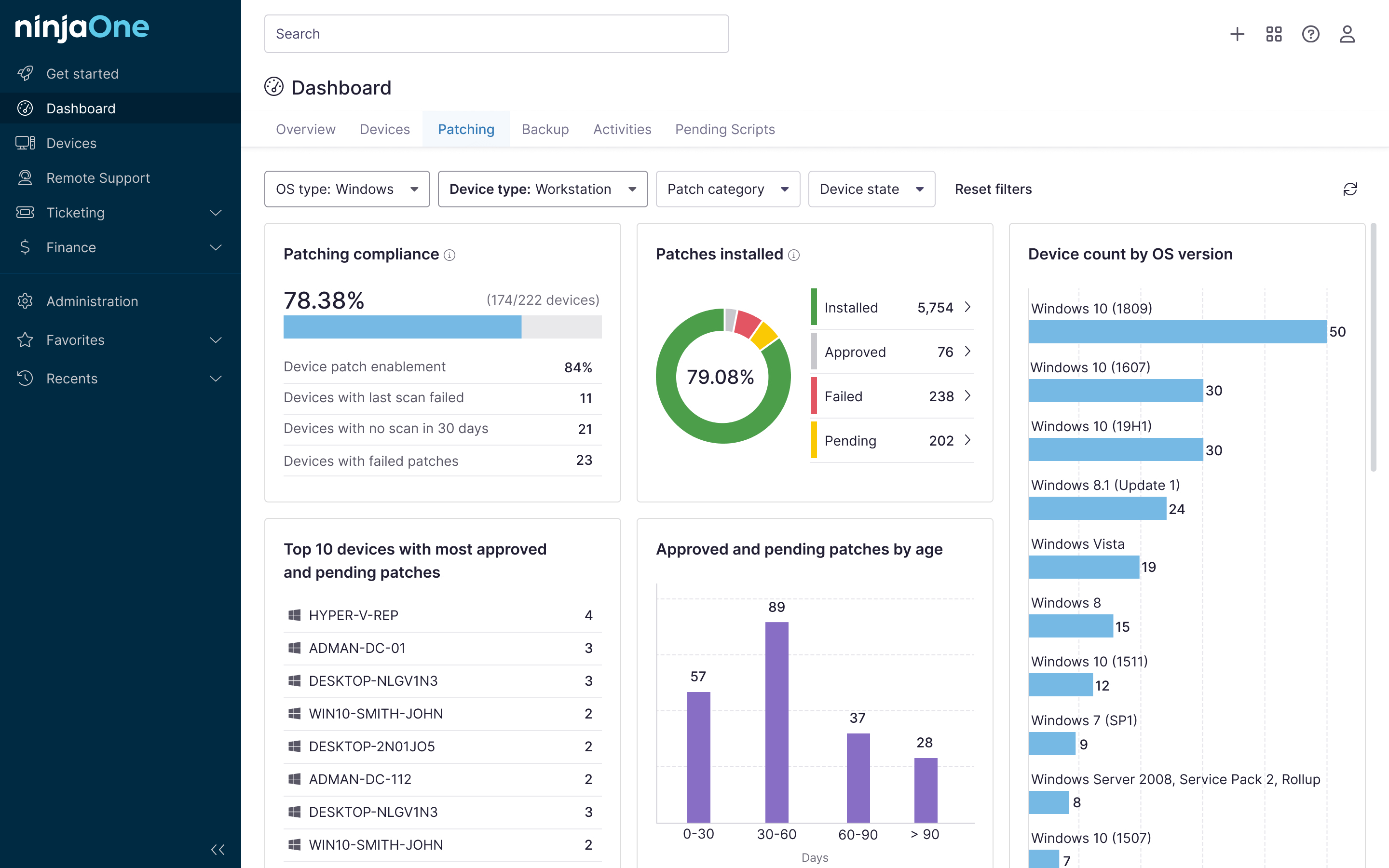

How NinjaOne fine-tunes overlapping monitoring thresholds for MSPs

NinjaOne provides integrated tools to efficiently operationalize these threshold-tuning strategies.

- Policy deployment: Deploy consistent monitoring policies per client or site using templates featuring overlapping bands, dampening windows, and severity-based actions, ensuring standardized protection for all Windows 11 devices.

- Automation & verification: Attach remediation scripts directly to Critical alerts; the platform can run post-fix verification checks and automatically write script outputs back to the ticket for auditability.

- Noise controls: Activate built-in alert deduplication, set cooldown windows to prevent storms, and configure tickets to auto-close when metrics return to their clear band for hands-off alert noise reduction.

- Reporting & dashboards: Leverage dashboards to track key MSP monitoring metrics, such as alert volume, duplicates avoided, auto-remediation success rates, and MTTR trends, providing clear evidence of improvement for each client.

This approach allows you to consistently apply, automate, and measure your tuning playbook at scale, turning individual configurations into a managed service offering.

Ready to operationalize your threshold tuning? Use NinjaOne to roll out layered policies, auto-remediate Critical alerts with verification, and quiet the noise with dedupe & cooldowns, then prove the impact in dashboards.


Achieve reliable monitoring with overlapping thresholds

By implementing overlapping monitoring thresholds with strategic dampening and severity-based automation, you transform chaotic alerts into a trustworthy signal system.

Remember to use static thresholds for absolute limits, dynamic baselines for patterned metrics, and always verify remediation before clearing alerts. This disciplined approach enables MSPs to eliminate alert fatigue, resolve real incidents faster, and deliver consistently superior client service.
