Key Points

- Use continuous exercises because short, frequent drills keep the incident response plan current.

- Reconstruct evidence-driven incident timelines to expose gaps and incorrect assumptions.

- Use lightweight root cause analyses (RCAs) or quick post-incident reviews to turn findings into concrete updates.

- Maintain versioned documents such as playbooks and comms trees, each with owners, due dates, and change logs.

- Measure readiness using standard metrics that track detection, containment, and recovery performance across clients.

Incident response plans often age quickly as tooling changes, staff rotate, and threats evolve. Ongoing exercises keep plans current, which is why modernizing incident response plans is an operational task rather than a one-time rewrite. This article shows how to operationalize plan upkeep.

Modernizing incident response plans with timeline-driven exercises

Modernizing incident response plans means finding process gaps, refining timelines, running exercises, updating documentation, measuring readiness, and aligning with partners.

📌 Prerequisites:

- Defined roles and on-call structure for incidents and exercises.

- Access to primary evidence sources such as EDR logs, Windows event logs, SaaS audit logs, email, and identity audit trails.

- Central document storage with version control and read access for all responders.

- Ticketing and communications channels for drills and real incidents.

- Stakeholder agreement on metrics and reporting cadence.

Step 1: Map where plans fail

This step identifies high-risk failure points before the next incident.

📌 Use Case: During a recent system outage, the IT operations team took longer than expected to restore services because of confusion around who owned specific actions, missing access to diagnostic tools, and unclear approval chains for emergency changes.

Review past incidents

Review a recent major incident and gather all postmortems, chat transcripts, and incident timelines. Look for moments that caused confusion or slowed progress.

List specific pain points

Document the issues that slowed the response. Common ones include:

- Missing contacts: No clear on-call list or outdated team directory.

- Unclear handoffs: Incident response ownership shifts without communication.

- Tool access delays: Engineers waiting for temporary permissions or VPN access.

Create a backlog and assign owners

Create a backlog with:

- Each issue as a separate task

- An owner accountable for resolution

- A due date for implementation
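The backlog structure above can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed format: the `BacklogItem` fields, the `overdue` helper, and the sample owners and dates are all assumptions made for the example.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class BacklogItem:
    """One pain point from the incident review, tracked as its own task."""
    issue: str
    owner: str          # person accountable for resolution
    due: date           # due date for implementation
    done: bool = False


def overdue(items: list[BacklogItem], today: date) -> list[BacklogItem]:
    """Return open items whose due date has passed, oldest first."""
    late = [item for item in items if not item.done and item.due < today]
    return sorted(late, key=lambda item: item.due)


# Hypothetical entries based on the pain points listed in this step.
backlog = [
    BacklogItem("Outdated on-call list", "ops-lead", date(2025, 3, 1)),
    BacklogItem("Unclear handoffs between shifts", "incident-commander", date(2025, 4, 15)),
    BacklogItem("VPN access delays for engineers", "it-admin", date(2025, 2, 10), done=True),
]
```

Calling `overdue(backlog, date.today())` during a weekly review gives a short list of items to chase, which keeps ownership visible without a dedicated tool.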

Step 2: Build accurate incident timelines

This step turns raw telemetry into a shared narrative that drives fixes.

📌 Use Case: After a ransomware attempt was detected, the security team realized three hours had passed between the first suspicious activity and the first containment action. The team struggled because logs were scattered across multiple systems, and timestamps were not synchronized.

Gather data sources

Collect evidence from systems that contributed to the incident. Use common sources such as:

- Endpoint and server logs

- Email audit logs

- Network telemetry

- SaaS admin logs

- Ticketing system timestamps

Standardize the timeline template

Use a structured timeline format so responders speak the same language when reviewing events.
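One possible shape for such a template is sketched below in Python. The field names (`when`, `source`, `actor`, `action`, `evidence`) are assumptions chosen for illustration, not a standard. The sketch also normalizes every timestamp to UTC, since unsynchronized timestamps were part of the problem in the use case.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass
class TimelineEvent:
    """One row of the shared timeline template (field names are illustrative)."""
    when: datetime   # always stored in UTC
    source: str      # e.g. "EDR", "email audit log", "ticketing"
    actor: str       # person or system that acted
    action: str      # what happened, in plain language
    evidence: str    # pointer to the log entry, alert, or email


def make_event(iso_time: str, source: str, actor: str,
               action: str, evidence: str) -> TimelineEvent:
    """Parse an ISO 8601 timestamp with a UTC offset and normalize it to UTC."""
    parsed = datetime.fromisoformat(iso_time)
    if parsed.tzinfo is None:
        # Refuse ambiguous local times instead of guessing a time zone.
        raise ValueError(f"timestamp needs an explicit UTC offset: {iso_time}")
    return TimelineEvent(parsed.astimezone(timezone.utc), source, actor, action, evidence)


def build_timeline(events: list[TimelineEvent]) -> list[TimelineEvent]:
    """Order events chronologically so every responder reads the same sequence."""
    return sorted(events, key=lambda event: event.when)
```

Rejecting timestamps without an offset is a deliberate choice here: it forces whoever enters the event to state which clock the time came from.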

Run a 60–90 minute timeline workshop

Gather everyone involved in the incident for a focused session. During the workshop, ensure you:

- Review each event entry.

- Validate times and actions with real evidence (log entries, alerts, emails).

- Identify missing context or conflicting timestamps.

- Highlight critical decision points.

Convert delays into action items

Mark the time gaps between detection, containment, and recovery. Then, turn each gap into a specific improvement task.
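As a rough sketch of this conversion, the Python below computes the elapsed time between consecutive milestones and drafts a task for every gap that exceeds a target. The milestone names and the single shared target are assumptions for the example; a real plan would likely set a separate target per phase.

```python
from datetime import datetime, timedelta

# Milestone names are assumptions; use whatever your timeline template defines.
MILESTONES = ["first_activity", "detected", "contained", "recovered"]


def phase_gaps(times: dict[str, datetime]) -> dict[str, timedelta]:
    """Return the elapsed time between each pair of consecutive milestones."""
    gaps = {}
    for start, end in zip(MILESTONES, MILESTONES[1:]):
        gaps[f"{start} -> {end}"] = times[end] - times[start]
    return gaps


def action_items(gaps: dict[str, timedelta], target: timedelta) -> list[str]:
    """Turn every gap longer than the target into a draft improvement task."""
    return [
        f"Reduce '{phase}' (took {gap}, target {target})"
        for phase, gap in gaps.items()
        if gap > target
    ]
```

The drafted strings are only starting points; each one still needs an owner and a due date before it goes into the backlog.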

Step 3: Run small-scope exercises

This step keeps the plan aligned with current tools.

📌 Use Case: The security team runs a tabletop exercise simulating a cloud credential leak. During the session, they realize the vendor escalation contact list is outdated, and several engineers are unsure how to initiate containment in the new ticketing system.

The following are examples of small-scope exercises:

Tabletop exercise

Tabletop exercises are structured walk-throughs of realistic scenarios. Use annotated timelines, communication templates, and playbooks to evaluate decision-making and role clarity.

Functional exercise

Functional exercises test one workflow end-to-end to ensure it works as intended. Use live systems, documented Standard Operating Procedures (SOPs), and approval chains.

Live exercise

Live exercises confirm operational readiness without affecting production systems. Examples include sending a test page, checking VPN access, or initiating a comms channel switch.

Establish a cadence for these exercises (monthly, quarterly, or semi-annually) and keep each one under 90 minutes.

Step 4: Update documentation

This step maintains living, reliable incident documents.

📌 Use Case: During a weekend data breach investigation, the incident commander tried escalating to the vendor manager but found that the contact had already left the company. After the incident, leadership mandated version-controlled updates so that every document includes a version number, owner, and review history.

The following are sample documents to maintain:

- Contact and escalation trees: Primary and alternate contacts, time-of-day or region-specific escalation paths, and vendor/emergency numbers.

- Role guides: Incident commander, communications lead, forensics lead, vendor manager.

- Playbooks for common incidents: Ransomware, Business Email Compromise (BEC), credential theft, insider misuse, DDoS, and data exposure.

- Communications templates: Executives, legal, clients, and vendors.

- Evidence collection checklist: Log sources to capture, storage locations, and integrity verification steps.
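For the integrity verification step in the evidence checklist, one common approach is to record a SHA-256 hash when a log export is collected and to recheck it before the file is used. The sketch below uses only Python's standard library; the function names `sha256_of` and `verify` are made up for this example.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large log exports do not need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify(path: Path, recorded_hash: str) -> bool:
    """Check that a stored evidence file still matches the hash recorded at collection."""
    return sha256_of(path) == recorded_hash.lower()
```

Storing the recorded hash alongside the storage location in the checklist lets any responder confirm later that the evidence has not changed.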

Step 5: Measure readiness

📌 Use Case: Following a simulated credential compromise exercise, the incident response team calculated key metrics. The results showed that only 60% of teams had participated in an incident exercise during the quarter, and several action items from the last post-incident review were still open past their Service Level Agreement (SLA).

Take note of the following to check readiness:

- Mean Time to Detect (MTTD): Average time between when the incident began and when it was detected.

- Mean Time to Contain (MTTC): Average time between detection and when the incident’s impact is successfully limited.

- Mean Time to Recover (MTTR): Average time from containment to complete restoration of regular service.

- Drill coverage by scenario and team: Percentage of key scenarios tested per period, and percentage of teams participating.

- Open action items older than the SLA: The number or percentage of unresolved improvement tasks past their assigned due date.
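The three time-based metrics and the coverage percentage can be computed directly from incident timestamps. The sketch below follows the definitions in the list above; the `Incident` record and the helper names are assumptions for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Incident:
    began: datetime
    detected: datetime
    contained: datetime
    recovered: datetime


def mean_delta(deltas: list[timedelta]) -> timedelta:
    """Average a non-empty list of durations."""
    return sum(deltas, timedelta()) / len(deltas)


def readiness(incidents: list[Incident]) -> dict[str, timedelta]:
    """Compute MTTD, MTTC, and MTTR from per-incident timestamps."""
    return {
        "MTTD": mean_delta([i.detected - i.began for i in incidents]),
        "MTTC": mean_delta([i.contained - i.detected for i in incidents]),
        "MTTR": mean_delta([i.recovered - i.contained for i in incidents]),
    }


def coverage(participated: int, total: int) -> float:
    """Percentage of teams (or scenarios) covered by drills in the period."""
    return round(100 * participated / total, 1)
```

For the use case above, `coverage(6, 10)` returns `60.0`, matching the 60% team participation figure if six of ten teams took part.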

Step 6: Align with vendors and third parties

📌 Use Case: During a SaaS outage that affected customer access, the incident commander discovered the vendor escalation contact was outdated. The team had to search through old tickets to find the new representative. When the case was finally opened, critical logs were missing.

Maintain a vendor contact list

Include primary and secondary contacts, after-hours and escalation procedures, contact numbers, service tiers, time zones, and preferred communication channels.

Document required data for vendor cases

When opening a vendor ticket, have the relevant data ready. Each vendor has different requirements, so define them beforehand to avoid delays. Typical data include:

- Timestamps of detection and impact.

- Affected assets or users.

- Log extracts or relevant telemetry.

- Error codes or alert IDs.

- Internal ticket reference numbers.
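A simple pre-flight check can catch missing data before a vendor case is opened. In the sketch below, the field names mirror the list above, while the vendor key `example-saas` and its extra `tenant_id` requirement are hypothetical.

```python
# Hypothetical per-vendor requirements; adjust to what each vendor actually asks for.
REQUIRED_FIELDS = {
    "default": [
        "detected_at", "affected_assets", "log_extract",
        "alert_id", "internal_ticket",
    ],
    "example-saas": [
        "detected_at", "affected_assets", "log_extract",
        "alert_id", "internal_ticket", "tenant_id",
    ],
}


def missing_fields(vendor: str, case: dict[str, str]) -> list[str]:
    """List required fields that are absent or empty before the ticket is opened."""
    required = REQUIRED_FIELDS.get(vendor, REQUIRED_FIELDS["default"])
    return [name for name in required if not case.get(name, "").strip()]
```

An empty result means the case is ready to submit; anything else tells the responder exactly what to collect first.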

Include a vendor response drill

Validate that vendor escalation processes work in practice. Simulate a scenario that requires vendor support, then follow the documented contact and case-opening process. Finally, verify response time, communication flow, and data accuracy.

Continuous maintenance and integration

- Schedule quarterly reviews of vendor contacts and procedures.

- Store the vendor registry in the same repository as your other incident response artifacts.

- Tag each entry with an owner, last verified date, and next review date.

- Share updates in the incident responder Slack channel and ticketing system.
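The tagging scheme above lends itself to a small automated check. The following sketch assumes a 90-day interval as a stand-in for "quarterly" and derives the next review date from the last verified date; the `VendorEntry` fields and sample vendors are illustrative only.

```python
from dataclasses import dataclass
from datetime import date, timedelta

REVIEW_INTERVAL = timedelta(days=90)  # assumed stand-in for "quarterly"


@dataclass
class VendorEntry:
    vendor: str
    owner: str
    last_verified: date

    @property
    def next_review(self) -> date:
        """Next review date, derived from the last verified date."""
        return self.last_verified + REVIEW_INTERVAL


def due_for_review(entries: list[VendorEntry], today: date) -> list[str]:
    """Return the vendors whose next review date is today or already past."""
    return [entry.vendor for entry in entries if entry.next_review <= today]


# Hypothetical registry entries.
registry = [
    VendorEntry("Example SaaS", "vendor-manager", date(2025, 1, 1)),
    VendorEntry("Example ISP", "network-lead", date(2025, 3, 1)),
]
```

Running `due_for_review(registry, date.today())` on a schedule and posting the result to the responder channel is one way to keep contact verification from slipping.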

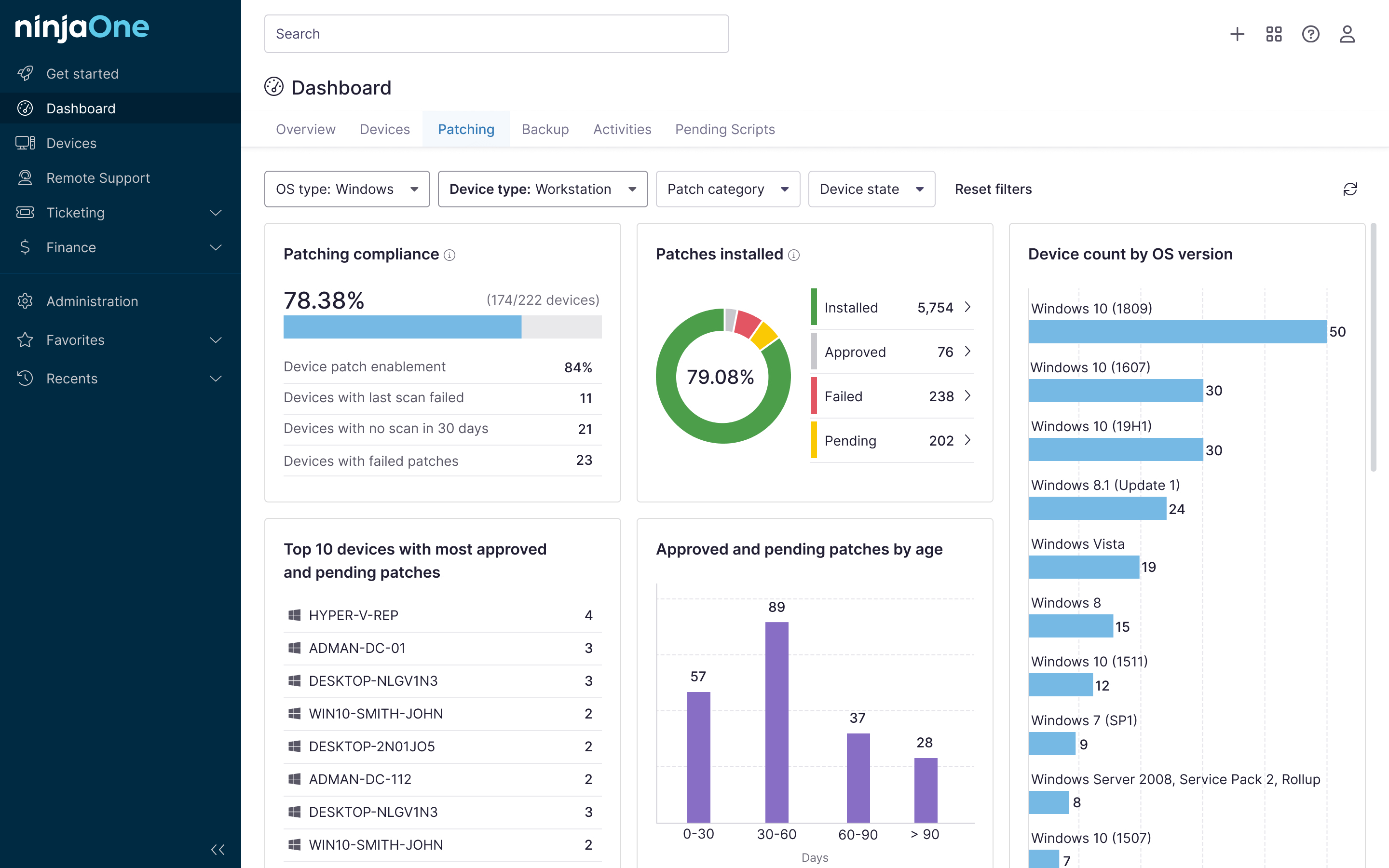

NinjaOne services that help modernize incident response plans

NinjaOne helps modernize incident response plans using the following services:

Automation and evidence collection

With NinjaOne, you can schedule scripts to export logs. The scheduled automation features let you run custom scripts on a schedule and support different script types and parameters.

Detection and tagging

NinjaOne lets you surface signals and tag devices or tickets. NinjaOne’s advanced detection capabilities include custom alerts, device tagging, condition-based monitoring, and more.

Reporting

You can generate performance scorecards with NinjaOne’s IT reporting tools, which include customizable report templates and PDF report generation.

Runbook distribution

NinjaOne’s documentation and runbook management include secure storage, a centralized documentation system, and more.

Quick-Start Guide

NinjaOne can help modernize your incident response plan with timeline-driven exercises. Here is how NinjaOne supports this:

1. Automated Alerts & Monitoring:

- NinjaOne provides real-time monitoring and alerts for endpoints, helping detect incidents early.

- You can set up automated responses to contain threats quickly.

2. Centralized Management:

- All devices and incidents are managed in one dashboard, making it easier to coordinate responses.

- You can track the status of each incident and ensure timely resolution.

3. Integration with PSA/Ticketing Tools:

- NinjaOne integrates with platforms like ServiceNow, allowing you to create incidents automatically and assign them to the right teams.

- This streamlines your incident response workflow and ensures accountability.

4. Reporting & Analytics:

- Detailed reports help you analyze incident trends and identify areas for improvement.

- Use these insights to refine your incident response plan and conduct timeline-driven exercises effectively.

Modernizing your incident response plans

Incident response plans stay effective only when they are exercised, measured, and updated regularly. Timeline-driven drills, lightweight RCAs, versioned documents, and clear ownership help ensure accuracy and accountability. With a steady, consistent cadence, your plan stays current, fast, and trusted.
