As you integrate artificial intelligence (AI) and machine learning (ML) technologies into your business operations, the cyber threats against these systems grow more sophisticated. Among these emerging threats, data poisoning stands out because it can manipulate and undermine the integrity of your AI-driven systems. Understanding and mitigating data poisoning risks will help you maintain the security and reliability of your AI and ML applications.

Data poisoning in AI and ML

Data poisoning is a form of cyberattack in which a malicious actor deliberately manipulates the data sets used to train a model. The goal is to skew the model’s results in order to sabotage your system operations, introduce vulnerabilities, or influence the model’s predictive or decision-making capabilities.

How data poisoning attacks work

AI and ML models learn from large, diverse training data sets, and data poisoning attacks exploit that dependence by inserting false or misleading records that can significantly alter a model’s behavior. This manipulation can be subtle, such as slightly altering the data inputs to degrade your model’s performance over time, or it can be more direct and destructive, aimed at causing immediate and noticeable disruptions.

Cybercriminals use various tactics to introduce errors into AI models, leading to compromised decision-making processes. Here are some data poisoning attack examples:

- Backdoor (label) poisoning: Injects data into the training set to create a ‘backdoor,’ allowing attackers to manipulate the model’s output when specific conditions are met. The attack can be targeted, where the attacker tries to make the model produce a certain behavior, or non-targeted, which generally disrupts the model’s overall functionality.

- Availability attack: Corrupts enough of the training data to degrade the model’s overall performance, making the systems or services that depend on it unreliable or unavailable, for example by inducing system crashes or generating false positives or negatives.

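- Model inversion attack: Uses the model’s outputs to infer or recreate parts of the training dataset. Strictly speaking, this attack extracts information rather than corrupting training data, so it is a related privacy threat rather than poisoning itself. It often comes from insiders with access to the system’s responses.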

- Stealth attack: Involves gradually altering the training dataset or injecting harmful data stealthily to avoid detection, leading to subtle yet impactful biases in the model over time.

Along with these data poisoning attack examples, attackers use many more tactics to exploit AI systems, making it important to incorporate security at every stage of AI development.

The impact of data poisoning on businesses and IT infrastructures

Consider this scenario: Your company in the financial sector uses AI models for fraud detection. An attacker introduces anomalies or incorrect labels into the training data that cause the model to classify fraudulent transactions as legitimate. This allows them to siphon off funds undetected, resulting in significant financial losses and erosion of customer trust.
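To make the scenario concrete, here is a minimal sketch of how mislabeled records can shift a detector’s decision boundary. The detector, the transaction amounts, and the labels are all hypothetical and far simpler than a real fraud model; the point is only to show the mechanism.

```python
from statistics import mean

def fit_threshold(samples):
    """Toy detector: flag amounts above the midpoint of the two class means."""
    legit = [amount for amount, label in samples if label == "legit"]
    fraud = [amount for amount, label in samples if label == "fraud"]
    return (mean(legit) + mean(fraud)) / 2

def is_fraud(amount, threshold):
    return amount > threshold

# Hypothetical clean training data: (transaction amount, label).
clean = [(20, "legit"), (35, "legit"), (50, "legit"), (65, "legit"),
         (900, "fraud"), (950, "fraud"), (1000, "fraud"), (1050, "fraud")]

# Poisoned copy: the attacker adds large transactions mislabeled as legitimate.
poisoned = clean + [(980, "legit")] * 8

clean_threshold = fit_threshold(clean)        # 508.75
poisoned_threshold = fit_threshold(poisoned)  # 821.25

print(is_fraud(700, clean_threshold))     # True: the transaction is flagged
print(is_fraud(700, poisoned_threshold))  # False: the same transaction slips through
```

In this toy case, eight mislabeled records are enough to let a 700-unit transaction pass unflagged. A production model is harder to move, but the principle is the same.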

In this case, the implications of data poisoning extend beyond just operational disruptions; they pose significant risks to the security, reputation, and financial health of your business.

If you often deploy AI solutions for operational efficiency and threat detection, you may find yourself particularly vulnerable. A successful data poisoning attack can compromise your insights, lead to flawed decision-making, and expose your business to severe security breaches and compliance risks.

Moreover, the subtle nature of such attacks can allow them to go undetected for long periods, causing cumulative damage that is complex and costly to undo once discovered. This extended impact can also lead to lost customer trust and potentially devastating legal and financial repercussions for your organization.

Strategies and defenses against data poisoning attacks

Defending against data poisoning attacks requires a multifaceted approach centered on robust security protocols and vigilant management of your AI models. These key strategies can help:

Enhanced data validation and filtering

Implement rigorous checks that validate and filter input data, analyzing incoming records for inconsistencies, anomalies, or patterns that deviate from established norms.

Techniques such as statistical analysis, anomaly detection algorithms, and machine learning models can be employed to automatically flag and review suspicious data. This helps ensure you use only clean, verified data for training AI models, which significantly reduces the risk of poisoning.
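As one illustration of a statistical check, the sketch below flags values whose modified z-score (based on the median absolute deviation) exceeds a cutoff. The function name, the sample values, and the 3.5 cutoff are assumptions chosen for the example; real pipelines typically combine several checks and route flagged records to human review.

```python
from statistics import median

def flag_outliers(values, cutoff=3.5):
    """Return indices of values whose modified z-score exceeds the cutoff."""
    med = median(values)
    mad = median([abs(v - med) for v in values])
    if mad == 0:
        # No spread to measure against; this sketch flags nothing in that case.
        return []
    return [
        i for i, v in enumerate(values)
        if abs(0.6745 * (v - med) / mad) > cutoff
    ]

# Hypothetical feature column from an incoming training batch.
batch = [52, 48, 50, 51, 49, 50, 47, 53, 500]
suspicious = flag_outliers(batch)
print(suspicious)  # [8]: only the 500 entry is held back for review
```

The median-based score is used here instead of a mean-based one because a few extreme injected values distort the mean and standard deviation, which can hide the very records you want to catch.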

Secure model training environments

Establish secure environments for AI training to shield the data and models from external threats. This includes using virtual private networks (VPNs), firewalls, and encrypted data storage solutions to create a controlled and monitored environment.

Tightly regulate access to these environments through role-based access controls (RBAC) so that only authorized personnel can interact with the AI systems and training data.
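A role-based check can be sketched in a few lines. The role names, permissions, and helper functions below are hypothetical; in practice, these rules would usually live in an identity provider or policy engine rather than in application code.

```python
# Hypothetical roles and permissions, hard-coded only for illustration.
ROLE_PERMISSIONS = {
    "ml_engineer": {"read_training_data", "write_training_data", "train_model"},
    "analyst": {"read_training_data"},
    "auditor": {"read_training_data", "read_audit_log"},
}

def is_allowed(role, action):
    """Deny by default: unknown roles and unlisted actions are rejected."""
    return action in ROLE_PERMISSIONS.get(role, set())

def write_training_record(role, dataset, record):
    """Append a record only if the caller's role may modify training data."""
    if not is_allowed(role, "write_training_data"):
        raise PermissionError(f"role {role!r} may not modify training data")
    dataset.append(record)

dataset = []
write_training_record("ml_engineer", dataset, {"amount": 42, "label": "legit"})

try:
    write_training_record("analyst", dataset, {"amount": 980, "label": "legit"})
except PermissionError as err:
    print(err)  # the analyst role is read-only, so the write is refused
```

The deny-by-default lookup is the important design choice: a role that is missing or misspelled gets no access rather than full access.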

Continuous model monitoring

Continuously track the performance and outputs of your AI models so you can detect unusual behavior that may indicate a data poisoning attack. Real-time performance dashboards and alert systems can notify the team when predefined thresholds are breached. Perform regular audits and updates to the model’s decision-making processes to adapt to new threats and changes in the operational environment.
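A threshold alert of this kind might look like the following sketch, which tracks accuracy over a sliding window of recent predictions. The class name, window size, and accuracy floor are assumptions for illustration, and the sketch presumes you eventually learn the true outcome for each prediction.

```python
from collections import deque

class AccuracyMonitor:
    """Tracks accuracy over the most recent predictions and signals a breach."""

    def __init__(self, window=50, min_accuracy=0.9):
        self.results = deque(maxlen=window)
        self.min_accuracy = min_accuracy

    def accuracy(self):
        return sum(self.results) / len(self.results) if self.results else None

    def breached(self):
        # Only alert once the window is full, to avoid noisy early readings.
        window_full = len(self.results) == self.results.maxlen
        return window_full and self.accuracy() < self.min_accuracy

    def record(self, predicted, actual):
        """Store one outcome and report whether the threshold is now breached."""
        self.results.append(predicted == actual)
        return self.breached()

monitor = AccuracyMonitor(window=5, min_accuracy=0.8)
for _ in range(5):
    monitor.record("legit", "legit")       # five correct predictions

print(monitor.record("legit", "fraud"))    # False: 4 of the last 5 are correct
print(monitor.record("legit", "fraud"))    # True: accuracy fell to 0.6
```

A drop like this does not prove poisoning on its own, since data drift produces the same signal, but it tells the team when to start investigating.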

Diverse data sources

Use data from multiple reliable sources to reduce the impact of any single source being compromised. Integrating data from varied sources not only enriches the training set but also introduces redundancy that can safeguard against targeted data manipulation. Periodically review and vet sources to maintain the reliability and integrity of the data pool.
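One simple way to benefit from that redundancy is to accept a label only when a clear majority of sources agree, and to note which sources dissent. The function, source names, and agreement threshold below are hypothetical; this is a sketch of the idea rather than a complete reconciliation process.

```python
from collections import Counter

def consensus_label(labels_by_source, min_agreement=0.5):
    """Return (label, dissenting_sources); label is None without a clear majority."""
    if not labels_by_source:
        raise ValueError("at least one source is required")
    counts = Counter(labels_by_source.values())
    label, votes = counts.most_common(1)[0]
    if votes / len(labels_by_source) <= min_agreement:
        # No clear majority: treat every source as needing review.
        return None, sorted(labels_by_source)
    dissenters = sorted(s for s, l in labels_by_source.items() if l != label)
    return label, dissenters

# Hypothetical labels for one transaction, reported by three data feeds.
label, dissenters = consensus_label(
    {"feed_a": "fraud", "feed_b": "fraud", "feed_c": "legit"}
)
print(label, dissenters)  # fraud ['feed_c']
```

A source that dissents repeatedly is a natural candidate for the periodic vetting described above.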

These strategies can bolster your defenses against data poisoning and enhance the overall security and reliability of your AI applications.

Future trends and precautions in AI security against data poisoning

As technology evolves, so do the tactics of cyber attackers. Future developments in AI and ML are likely to introduce new forms of data poisoning, necessitating even more sophisticated countermeasures. To stay ahead of these changes, businesses must remain proactive and adopt current security technologies and practices to maintain adequate defenses against data poisoning attacks.

Emerging tools like artificial immune systems, which aim to detect and respond to threats in a manner akin to biological immune systems, are among the preventative technologies being explored. Similarly, advancements in encryption and blockchain technology could provide new ways to secure training data against tampering.
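The core idea that blockchain-style approaches rely on, making tampering evident, can be illustrated with a simple hash chain. This is a minimal sketch, not a blockchain: the function names and records are hypothetical, and it assumes the trusted digests are stored somewhere an attacker cannot modify.

```python
import hashlib
import json

def chain_digests(records, seed="genesis"):
    """Hash each record together with the previous digest, forming a chain."""
    digests = []
    previous = hashlib.sha256(seed.encode()).hexdigest()
    for record in records:
        payload = json.dumps(record, sort_keys=True).encode()
        previous = hashlib.sha256(previous.encode() + payload).hexdigest()
        digests.append(previous)
    return digests

def first_tampered_index(records, trusted_digests):
    """Return the index of the first record that no longer matches, else None."""
    current = chain_digests(records)
    for i, (now, trusted) in enumerate(zip(current, trusted_digests)):
        if now != trusted:
            return i
    if len(current) != len(trusted_digests):
        # Records were appended or removed after the digests were taken.
        return min(len(current), len(trusted_digests))
    return None

# Hypothetical training records and the digests taken when they were approved.
records = [{"amount": 20, "label": "legit"}, {"amount": 950, "label": "fraud"}]
trusted = chain_digests(records)

records[1]["label"] = "legit"                  # simulated label flip
print(first_tampered_index(records, trusted))  # 1
```

Because each digest depends on every record before it, an attacker cannot alter one record without changing every later digest.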

Reduce your risk of cyber threats with proactive AI security measures

Data poisoning is a potent and evolving threat to your artificial intelligence and machine learning systems. Understanding the nature of these attacks, implementing a robust security framework, staying informed of the latest trends in AI security, and adopting proactive measures will help you safeguard your digital assets and maintain the integrity of your AI-driven operations.

If you work on a security team or lead IT, you need to address current threats while also anticipating future vulnerabilities. Staying ahead of cyber threats is not just about protection; it’s about ensuring sustainability and trust.

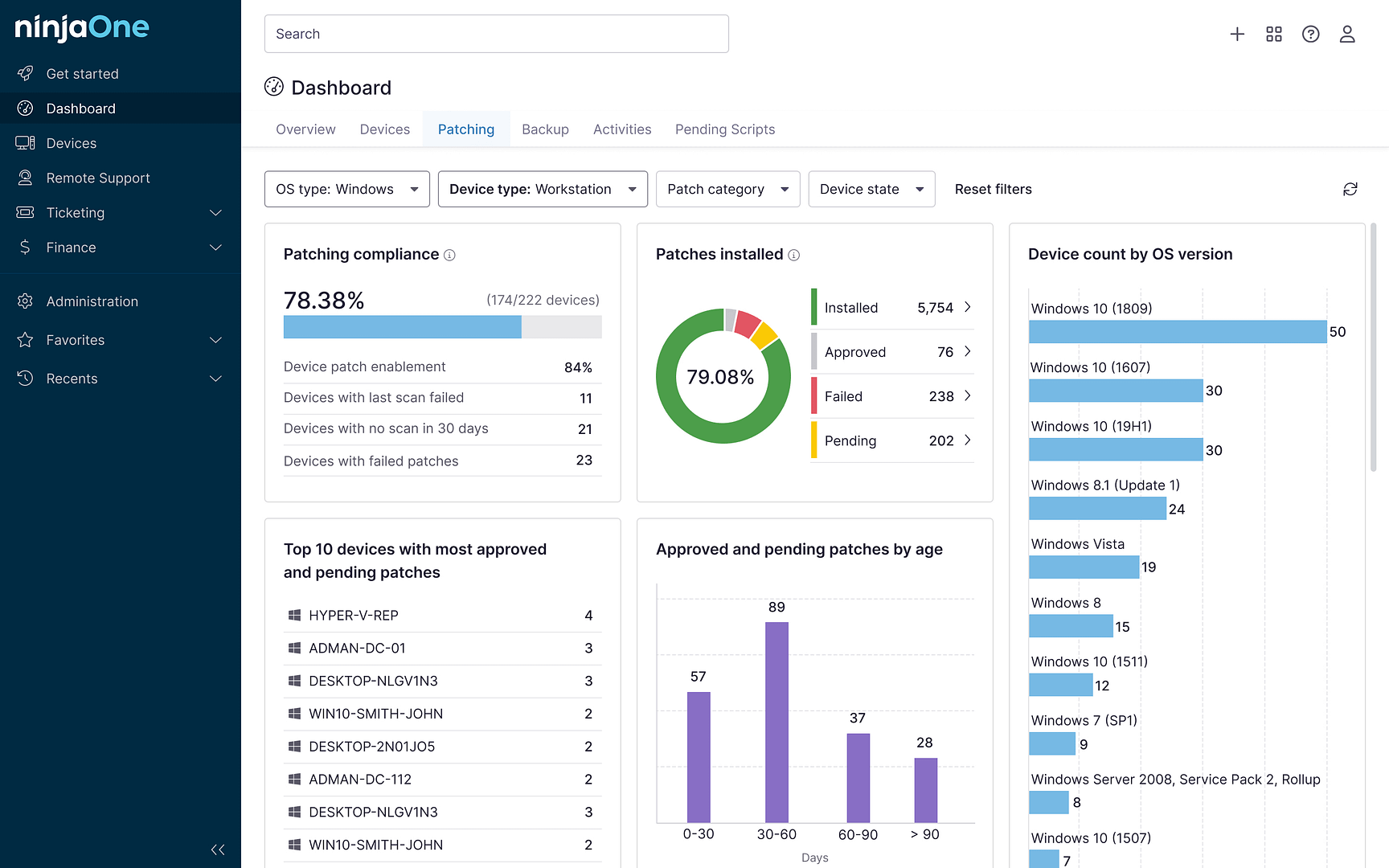

The types of cyber threats your organization might encounter are diverse and constantly evolving, but with the right approach, you can significantly reduce your risk. It also helps to have an RMM and IT management solution, such as NinjaOne, that allows you to set the foundation for a strong IT security stance. With automated patch management, secure backups, and complete visibility into your IT infrastructure, NinjaOne RMM helps you protect your business from the start.