Large-scale AI transformations often overpromise on outcomes and underdeliver on timelines. By contrast, organizations that see meaningful results from AI take a more pragmatic path: they adopt AI in controlled, incremental phases and validate performance at each step. This measured approach tends to deliver real gains in operational efficiency and cost savings.

In IT operations, the most effective teams build AI capability layer by layer, ensuring each element delivers value before scaling further.

What is an AI stack for IT operations?

An AI stack for IT operations is a coordinated set of tools, platforms, and processes that work together to automate and enhance specific IT management tasks. Unlike standalone AI tools or ad hoc implementations, which tend to offer limited, isolated benefits, a unified AI stack integrates these capabilities into a cohesive system that addresses concrete challenges such as incident response, performance monitoring, capacity planning, and routine maintenance.

Components of an effective AI stack

Building an effective AI stack requires an understanding of how machine learning (ML) components work together to create operational value. Rather than viewing these as separate tools, you must treat them as integrated layers that build upon each other to deliver measurable improvements.

Data collection and monitoring tools

Your existing monitoring infrastructure serves as the foundation for AI capabilities, making this the logical starting point for any implementation. Most organizations already collect operational data through network monitoring, server performance metrics, application logs, and user activity tracking.

Consider these key capabilities when evaluating or enhancing your data collection layer:

- Real-time ingestion: Pulls data from servers, networks, apps, and security tools with sub-second latency.

- Data retention: Keeps 12–24 months of history for trends and seasonal planning.

- Log normalization: Standardizes formats so you can analyze everything in one place.

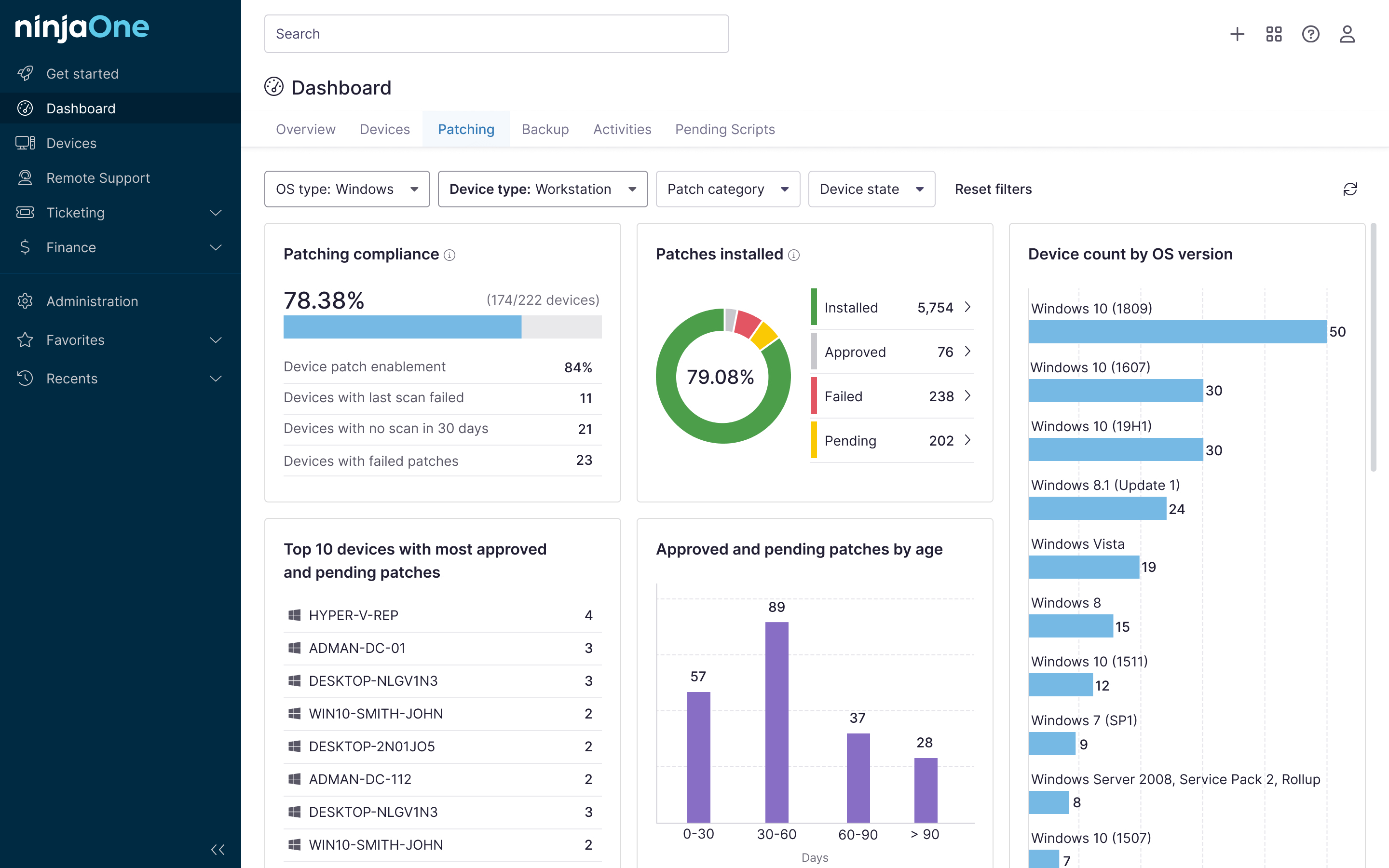

- Tool integration: Works with tools like NinjaOne without requiring replacement.
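To make log normalization concrete, here is a minimal Python sketch that maps two hypothetical input formats (a space-delimited text line and a JSON application log) onto one shared schema with UTC timestamps and a common severity scale. The field names, formats, and severity mapping are assumptions for illustration; real tools emit their own formats, and in practice your monitoring platform or log pipeline usually handles this step for you.

```python
import json
import re
from dataclasses import dataclass
from datetime import datetime, timezone

@dataclass
class NormalizedEvent:
    timestamp: datetime  # timezone-aware, always UTC
    source: str          # host or application that emitted the event
    severity: str        # "info", "warning", "error", "critical", or "unknown"
    message: str

# Hypothetical mapping from each tool's severity labels onto one shared scale
SEVERITY_MAP = {
    "info": "info", "notice": "info",
    "warn": "warning", "warning": "warning",
    "err": "error", "error": "error",
    "crit": "critical", "critical": "critical", "fatal": "critical",
}

# Assumed text format: "<ISO-8601 timestamp> <host> <level> <message>"
TEXT_LINE = re.compile(r"^(\S+)\s+(\S+)\s+(\w+)\s+(.*)$")

def _to_utc(raw_ts: str) -> datetime:
    # Older Pythons' fromisoformat rejects a trailing "Z", so rewrite it as +00:00
    ts = datetime.fromisoformat(raw_ts.replace("Z", "+00:00"))
    if ts.tzinfo is None:
        ts = ts.replace(tzinfo=timezone.utc)  # assumption: naive timestamps are UTC
    return ts.astimezone(timezone.utc)

def normalize(raw: str) -> NormalizedEvent:
    raw = raw.strip()
    if raw.startswith("{"):
        # Assumed JSON schema: {"ts": ..., "app": ..., "level": ..., "msg": ...}
        doc = json.loads(raw)
        ts, source, level, msg = doc["ts"], doc["app"], doc["level"], doc["msg"]
    else:
        match = TEXT_LINE.match(raw)
        if match is None:
            raise ValueError(f"unrecognized log format: {raw!r}")
        ts, source, level, msg = match.groups()
    return NormalizedEvent(
        timestamp=_to_utc(ts),
        source=source,
        severity=SEVERITY_MAP.get(level.lower(), "unknown"),
        message=msg,
    )
```

Once every source passes through a function like `normalize`, events from a server's text log and an application's JSON log can be sorted, filtered, and correlated on the same fields.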

Machine learning and analytics platforms

The analytics layer turns your monitoring data into actionable insights through pattern recognition and predictive modeling. Modern ML platforms designed for IT operations focus on practical applications: anomaly detection that reduces false positives, capacity forecasting, and incident correlation that identifies root causes within minutes rather than hours.
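One simple way to implement anomaly detection is a rolling z-score: compare each new reading with the mean and standard deviation of recent history. The sketch below assumes evenly spaced samples of a single metric; `window`, `threshold`, and `min_sigma` are illustrative defaults rather than values from any particular platform, and commercial tools typically use more sophisticated seasonal models.

```python
from collections import deque
from statistics import mean, pstdev

def detect_anomalies(values, window=12, threshold=3.0, min_sigma=1.0):
    """Return indices of samples that sit more than `threshold` standard
    deviations away from the mean of the preceding `window` samples.

    min_sigma is expressed in the metric's own units (assumed: CPU percent).
    """
    history = deque(maxlen=window)  # sliding baseline of recent samples
    anomalies = []
    for i, value in enumerate(values):
        if len(history) == window:  # only score once the baseline is full
            mu = mean(history)
            # Floor the spread so a perfectly flat baseline doesn't flag tiny wobbles
            sigma = max(pstdev(history), min_sigma)
            if abs(value - mu) / sigma > threshold:
                anomalies.append(i)
        history.append(value)
    return anomalies

cpu = [40, 42, 41, 39, 40, 43, 41, 40, 42, 41, 40, 41, 95, 41, 40]
print(detect_anomalies(cpu))  # [12]: only the spike to 95 is flagged
```

Raising `threshold` or lengthening `window` trades sensitivity for fewer false positives; tuning these against your own incident history is what makes the resulting alerts trustworthy.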

These platforms should integrate seamlessly with your data collection tools and provide clear, actionable recommendations. The most effective solutions offer pre-built models for common IT scenarios while allowing customization for your specific environment and operational patterns.
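Incident correlation can take many forms. A common approach that is easy to reason about is topology-based: when a component and something it depends on are both alerting, the upstream failure is the more likely root cause. The sketch below assumes a hypothetical `depends_on` map from each component to the components it relies on; real platforms may combine this with timing and statistical correlation.

```python
def root_cause_candidates(alerting, depends_on):
    """Return alerting components that have no alerting upstream dependency.

    alerting:   set of component names currently raising alerts
    depends_on: dict mapping a component to the components it relies on
    """
    def has_alerting_upstream(component, seen):
        for upstream in depends_on.get(component, []):
            if upstream in seen:
                continue  # already visited; also guards against dependency cycles
            seen.add(upstream)
            if upstream in alerting or has_alerting_upstream(upstream, seen):
                return True
        return False

    return sorted(c for c in alerting if not has_alerting_upstream(c, set()))

# Hypothetical topology: web tier -> app server -> database -> storage array
topology = {"web01": ["app01"], "web02": ["app01"], "app01": ["db01"], "db01": ["san01"]}
alerts = {"web01", "web02", "app01", "db01", "mail01"}
print(root_cause_candidates(alerts, topology))  # ['db01', 'mail01']
```

Five alerts collapse into two candidates to investigate: the database failure that likely explains the web and app alerts, and an unrelated mail alert.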

Automation and orchestration layers

Automation capabilities turn analytics into action by using identified patterns and predicted problems to trigger consistent, rule-based responses across your environment. This layer handles routine tasks like server provisioning, patch management, backup scheduling, and incident response workflows.

Orchestration tools coordinate these automated actions across different systems and teams, ensuring consistent execution and proper documentation. The key is starting with simple, well-understood processes before expanding to more complex scenarios that require human oversight.
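A minimal sketch of rule-based dispatch, assuming analytics findings arrive as dictionaries with hypothetical `metric` and `value` keys. Rules marked `requires_approval` are routed to a human queue instead of executing, which mirrors the advice to automate well-understood tasks first and keep human oversight on riskier ones. The rule and action names are placeholders, not commands from any real tool.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Rule:
    name: str
    matches: Callable[[dict], bool]  # predicate over one analytics finding
    action: str                      # hypothetical action identifier
    requires_approval: bool = False  # True sends the action to a human queue

def dispatch(finding, rules):
    """Split matching actions into those run automatically and those held for approval."""
    auto, pending = [], []
    for rule in rules:
        if rule.matches(finding):
            (pending if rule.requires_approval else auto).append(rule.action)
    return auto, pending

# Illustrative rules: a low-risk cleanup runs unattended; a restart needs sign-off
RULES = [
    Rule("disk-cleanup",
         lambda f: f["metric"] == "disk_used_pct" and f["value"] > 90,
         "purge_temp_files"),
    Rule("service-restart",
         lambda f: f["metric"] == "service_down",
         "restart_service",
         requires_approval=True),
]
```

As confidence in a rule grows, moving it from the approval queue to automatic execution becomes a one-line change that is easy to review and audit.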

Integration and API management systems

Integration complexity is the main barrier keeping many organizations from starting their AI journey: 95% of IT leaders cite integration challenges as an obstacle to implementing AI effectively. API management systems address this by providing the connectivity framework that lets different components share data and coordinate actions, and 55% of IT leaders report that these systems improve their IT infrastructure.

These systems handle authentication, data transformation, error handling and performance monitoring across all your integrated tools. Strong integration capabilities reduce the technical debt associated with connecting multiple vendors and platforms while maintaining security and compliance requirements through centralized policy management.
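Error handling is one of the responsibilities listed above. A widely used pattern for calls between integrated tools is retrying transient failures with capped exponential backoff and random jitter. In this sketch, `TransientError` is a hypothetical exception your API client would raise for timeouts or rate-limit responses, and the `sleep` parameter is injectable so the behavior can be tested without actually waiting.

```python
import random
import time

class TransientError(Exception):
    """Hypothetical: raised by a client for failures worth retrying (timeouts, HTTP 429/503)."""

def call_with_retry(func, max_attempts=4, base_delay=0.5, max_delay=8.0, sleep=time.sleep):
    """Call func(); on TransientError, retry with capped exponential backoff plus jitter."""
    for attempt in range(1, max_attempts + 1):
        try:
            return func()
        except TransientError:
            if attempt == max_attempts:
                raise  # out of attempts: surface the error to the caller
            cap = min(max_delay, base_delay * 2 ** (attempt - 1))
            # "Full jitter": a random wait up to the cap keeps many clients from retrying in lockstep
            sleep(random.uniform(0, cap))
```

Non-transient failures, such as authentication errors, should not be retried; letting them propagate immediately is why only `TransientError` is caught.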

How to create an AI stack for IT step-by-step

Creating an effective AI stack requires systematic planning and execution rather than an attempt to implement everything at once. Building in stages lets you validate each component’s value before adding complexity.

Assess your current IT infrastructure and needs

Understanding your existing capabilities and specific operational challenges provides the foundation for making informed technology decisions. Start by documenting your current monitoring tools, data sources, automation scripts, and integration points using a structured assessment framework.

Here are the essential areas to evaluate:

- Monitoring coverage: Identify visibility gaps and measure coverage by system type.

- Data quality: Assess completeness and accuracy across existing sources.

- Automation: Document current automation scope and track success rates.

- Integration complexity: Map API endpoints, data formats, and sources of technical debt.

- Team capacity: Gauge workload, skill gaps, and training needs for new tools and processes.
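Measuring monitoring coverage by system type can start as simply as comparing your asset inventory against what your monitoring tools report. This sketch assumes a hypothetical inventory export in which each asset has `name`, `type`, and `monitored` fields.

```python
from collections import defaultdict

def coverage_by_type(inventory):
    """Return {system_type: {"coverage_pct": float, "unmonitored": [names]}}.

    inventory: iterable of dicts with assumed keys "name", "type", "monitored".
    """
    totals = defaultdict(int)
    covered = defaultdict(int)
    gaps = defaultdict(list)
    for asset in inventory:
        totals[asset["type"]] += 1
        if asset["monitored"]:
            covered[asset["type"]] += 1
        else:
            gaps[asset["type"]].append(asset["name"])  # visibility gap to close
    return {
        t: {"coverage_pct": round(100 * covered[t] / totals[t], 1), "unmonitored": gaps[t]}
        for t in totals
    }
```

The `unmonitored` lists double as a starting backlog for closing visibility gaps before any AI tooling depends on that data.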

Select the right AI tools for IT operations

Tool selection should prioritize operational fit and integration capabilities over impressive feature lists or marketing claims. Focus on solutions that complement your existing infrastructure rather than requiring wholesale replacement, which significantly reduces implementation costs.

Evaluate vendors on their ability to work with your current data sources, their track record with similar organizations in your industry, and their approach to gradual implementation. Start with tools that offer 14–30-day proof-of-concept periods and can demonstrate measurable value within 90 days of deployment.
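A weighted scoring matrix is one way to keep vendor evaluation anchored to operational fit rather than feature lists. The criteria below paraphrase the factors in this section; the weights and the 1–5 rating scale are assumptions you would adjust to your own priorities. Integration fit carries the largest weight here because the guidance above puts it first.

```python
# Hypothetical weights reflecting the priorities above; they should sum to 1.0
WEIGHTS = {
    "integration_fit": 0.40,         # works with current data sources, no rip-and-replace
    "industry_track_record": 0.20,   # results with similar organizations
    "phased_rollout_support": 0.25,  # supports gradual implementation
    "poc_terms": 0.15,               # proof-of-concept length and time to measurable value
}

def weighted_score(scores, weights=WEIGHTS):
    """scores: criterion -> rating on a 1-5 scale. Returns a weighted score on the same scale."""
    missing = weights.keys() - scores.keys()
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    return round(sum(weights[c] * scores[c] for c in weights), 2)
```

Requiring a rating for every criterion prevents a vendor from scoring well simply because an inconvenient criterion was never assessed.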

Plan your integration and deployment strategy

Integration planning determines whether your AI stack becomes a valuable operational asset or an expensive maintenance burden. Start with a single use case that addresses a clear operational pain point and has measurable success criteria, such as reducing incident response time or decreasing manual intervention.

Design your integration architecture to support gradual expansion while maintaining system stability through proper API versioning and rollback procedures. Document your deployment timeline with specific validation checkpoints that must be met before proceeding to the next phase, including performance benchmarks and user acceptance criteria.
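Validation checkpoints are easier to enforce when they are written down as explicit pass/fail criteria. This sketch encodes a hypothetical phase-1 gate; the metric names and thresholds are placeholders, and a missing measurement is treated as a failure so a phase cannot pass by omission.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Checkpoint:
    metric: str
    threshold: float
    higher_is_better: bool = True

def gate(measured, checkpoints):
    """Return (proceed, failures) for one phase. A missing metric counts as a failure."""
    failures = []
    for cp in checkpoints:
        value = measured.get(cp.metric)
        if value is None:
            failures.append(f"{cp.metric}: not measured")
            continue
        ok = value >= cp.threshold if cp.higher_is_better else value <= cp.threshold
        if not ok:
            failures.append(f"{cp.metric}: {value} vs threshold {cp.threshold}")
    return not failures, failures

# Hypothetical phase-1 criteria: a performance benchmark plus user acceptance
PHASE_1 = [
    Checkpoint("mttr_minutes", 45, higher_is_better=False),
    Checkpoint("alert_precision", 0.80),
    Checkpoint("user_acceptance_pct", 75),
]
```

A failed gate is the natural trigger for the rollback procedure, and the `failures` list records why the phase did not advance.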

Test and validate your AI stack performance

Validation ensures each component delivers the promised value before you invest in additional capabilities. Establish baseline metrics for the operational challenges you are addressing, then measure improvement after implementing each stack component using statistical significance testing. Focus on practical metrics like reduced incident response time, improved system uptime, or decreased manual intervention requirements.
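For the significance-testing step, a permutation test is a reasonable choice for operational metrics such as incident response time, which are often skewed and rarely normally distributed. This standard-library sketch asks a one-sided question: is the mean after deployment lower than the baseline mean by more than random reshuffling would explain? The resample count and seed are arbitrary choices.

```python
import random
from statistics import mean

def permutation_test(baseline, after, n_resamples=10_000, seed=0):
    """One-sided permutation test for a reduction in the mean.

    Returns (observed_reduction, p_value): the drop in mean from baseline to
    after, and how often randomly relabeled data shows a drop at least as large.
    """
    observed = mean(baseline) - mean(after)
    pooled = list(baseline) + list(after)
    n_base = len(baseline)
    rng = random.Random(seed)  # seeded so the result is reproducible
    extreme = 0
    for _ in range(n_resamples):
        rng.shuffle(pooled)  # pretend the before/after labels are meaningless
        diff = mean(pooled[:n_base]) - mean(pooled[n_base:])
        if diff >= observed:
            extreme += 1
    # +1 in numerator and denominator avoids reporting p = 0 from a finite sample
    p_value = (extreme + 1) / (n_resamples + 1)
    return observed, p_value
```

A small p-value suggests the improvement is unlikely to be noise; it does not by itself show that the AI component caused it, so compare periods with similar workloads where possible.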

Best practices for AI stack implementation

Successful AI stack implementations follow proven practices that maximize value while minimizing risk and disruption. This often requires careful sequencing of technology rollouts, thorough validation of each integration point, and ongoing measurement of operational impact to ensure each layer of the stack delivers tangible improvements.

The following practices will help you avoid common pitfalls and get the most from your investment:

- Start with high-value, low-risk use cases that can demonstrate clear operational benefits within 60–90 days.

- Maintain existing operational procedures while gradually introducing AI-enhanced processes through parallel testing.

- Invest in team training and change management to ensure successful adoption, and set aside a dedicated portion of the project budget for training.

- Document all integrations and configurations in detailed runbooks to support ongoing maintenance and troubleshooting.

- Establish clear governance policies for AI decision-making and human oversight, including defined escalation procedures.
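Parallel testing is often run in "shadow mode": the AI recommends an action for each incident, operators keep working as usual, and the two are compared afterward. This sketch assumes each incident is logged as a hypothetical `(incident_id, ai_recommendation, human_action)` tuple; the 90% agreement threshold is an illustrative governance policy, and disagreements are returned for human review rather than resolved automatically.

```python
def shadow_report(records, min_agreement=0.9):
    """Summarize a shadow-mode period.

    records: list of (incident_id, ai_recommendation, human_action) tuples.
    Returns the agreement rate, the disagreements to escalate for review,
    and whether the agreement meets the (assumed) cutover policy.
    """
    if not records:
        raise ValueError("no shadow-mode records to evaluate")
    disagreements = [(iid, ai, human) for iid, ai, human in records if ai != human]
    agreement = 1 - len(disagreements) / len(records)
    return {
        "agreement": round(agreement, 3),
        "disagreements": disagreements,  # feed these into the escalation/review queue
        "ready_for_cutover": agreement >= min_agreement,
    }
```

Reviewing the disagreements, not just the agreement rate, shows whether the AI is wrong or whether it is catching something operators missed.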

Optimizing your AI stack for long-term success

Long-term optimization requires continuous refinement based on operational experience and changing business requirements. The biggest challenge after initial implementation is knowing what to do next: how to expand capabilities without disrupting proven processes that are already delivering value.

Focus on gradually expanding successful use cases rather than constantly adding new tools or vendors. This keeps the system stable while your team builds expertise. Quarterly performance reviews help identify optimization opportunities and confirm that your AI stack continues to deliver measurable, documented improvements in key operational metrics.

Streamline your IT stack with automated RMM

NinjaOne’s RMM platform brings automation, seamless integrations, and centralized management to every layer of your IT stack, making it easier to deploy, monitor, and secure modern infrastructure. Its intuitive interface and robust automation tools help IT teams reduce manual effort and accelerate operational efficiency. Ready to optimize your IT stack? Start your free trial today!