Effective log management and data analysis are vital components of a robust IT infrastructure. They empower organizations to proactively manage their systems, identify and address potential issues, and maintain high levels of performance and security. In this context, the ELK stack plays an indispensable role. It provides a unified framework for managing, analyzing, and visualizing data, thereby simplifying and streamlining these critical operations.

The ELK stack (or just ELK) has undergone significant evolution since its inception. Initially focused on log management, it has expanded its capabilities to become a comprehensive tool for handling a wide range of analytics tasks. This evolution is a testament to the growing demand for integrated solutions capable of managing the complexities associated with Big Data. ELK stands out as a prime example of this trend, making sophisticated data analysis more accessible and actionable for businesses and IT professionals alike.

What is the ELK stack?

ELK is an acronym that stands for Elasticsearch, Logstash, and Kibana. Together, these three components provide a powerful, integrated solution for managing large volumes of data, offering real-time insights and a comprehensive analytics suite.

- Elasticsearch is at the core of the stack. It acts as a highly efficient search and analytics engine, capable of handling vast amounts of data with speed and accuracy.

- Logstash is the data processing component of the stack. It specializes in collecting, enriching, and transporting data, making it ready for analysis.

- Kibana is the user interface of the stack. It allows users to create and manage dashboards and visualizations, turning data into easily understandable formats.

ELK’s emergence as a key tool in the Big Data era is a reflection of its ability to address the complex challenges of data management and analysis. It has become a go-to solution for organizations looking to harness the power of their data.

The synergy between Elasticsearch, Logstash, and Kibana is the cornerstone of ELK’s effectiveness, making the whole greater than the sum of its parts. Each component complements the others, creating a powerful toolkit that enables businesses to turn raw data into meaningful insights. Together, they provide sophisticated search, efficient data processing, and dynamic visualizations within a single, integrated platform.

Key components of the ELK stack

Elasticsearch

At its heart, Elasticsearch is a distributed search and analytics engine. It excels in managing and analyzing large volumes of data.

Its main features include:

- Advanced full-text search capabilities.

- Efficient indexing for quick data retrieval.

- Powerful data querying functions.

Elasticsearch is renowned for its scalability and reliability, especially when dealing with massive datasets. It is designed to scale horizontally, ensuring that as an organization’s data requirements grow, its data analysis capabilities can grow correspondingly.
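Indexing and querying in Elasticsearch both go through its REST API. The bash helper below is a minimal sketch, assuming an installed Elasticsearch 8.x node with its default security settings: HTTPS on localhost:9200, the auto-generated CA certificate at /etc/elasticsearch/certs/http_ca.crt (readable by root), and the built-in elastic user's password stored in ES_PASS, a hypothetical variable name. The index name app-logs in the usage comments is likewise hypothetical.

```shell
# Minimal helper for calling the Elasticsearch REST API with curl.
# Assumptions: Elasticsearch 8.x with default security, the CA certificate at
# ES_CA, and the "elastic" user's password in ES_PASS (hypothetical name).
ES_URL="${ES_URL:-https://localhost:9200}"
ES_CA="${ES_CA:-/etc/elasticsearch/certs/http_ca.crt}"

# es METHOD PATH [JSON_BODY]
es() {
  local args=(-s --cacert "$ES_CA" -u "elastic:${ES_PASS:-}" -X "$1" "${ES_URL}$2")
  if [ -n "${3:-}" ]; then
    # Only send a body (and its content type) when one is given.
    args+=(-H 'Content-Type: application/json' -d "$3")
  fi
  curl "${args[@]}"
}

# Example usage with a hypothetical index named "app-logs":
#   es PUT  /app-logs/_doc/1 '{"message": "disk usage above 90% on web-01", "level": "warn"}'
#   es POST /app-logs/_refresh    # make the new document searchable immediately
#   es GET  /app-logs/_search '{"query": {"match": {"message": "disk usage"}}}'
```

The explicit _refresh call is there because Elasticsearch search is near real-time: a newly indexed document becomes visible to searches after the next index refresh, which by default happens roughly once per second.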

Logstash

Logstash plays a pivotal role in the ELK stack as the data collection, transformation, and enrichment tool. It is versatile in handling a wide range of data sources and formats, including both structured and unstructured logs. The plugin ecosystem is a significant feature of Logstash, allowing users to extend its functionality with custom plugins tailored to specific needs.
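A Logstash pipeline has three stages: input, filter, and output. The bash function below is a sketch that writes a minimal pipeline file, assuming Filebeat-style Beats traffic on port 5044, web access logs in the combined format, and a local Elasticsearch 8.x node. The file name beats.conf, the index name weblogs, the certificate path /etc/logstash/certs/http_ca.crt (a copy of Elasticsearch's http_ca.crt, since the logstash user typically cannot read /etc/elasticsearch), and the ES_PASS variable are all hypothetical. Using the elastic superuser is only suitable for a quick test; a dedicated user with limited privileges is preferable.

```shell
# Sketch: write a minimal Logstash pipeline (input -> filter -> output).
# Run as root; pass a different path as $1 to write elsewhere.
write_beats_pipeline() {
  local conf="${1:-/etc/logstash/conf.d/beats.conf}"
  # Quoted 'EOF' keeps the shell from expanding ${ES_PASS}; Logstash resolves it
  # itself from its environment or keystore when the pipeline loads.
  cat > "$conf" <<'EOF'
input {
  beats {
    port => 5044
  }
}

filter {
  # Split combined-format web access log lines into structured fields.
  grok {
    match => { "message" => "%{COMBINEDAPACHELOG}" }
  }
}

output {
  elasticsearch {
    hosts    => ["https://localhost:9200"]
    user     => "elastic"
    password => "${ES_PASS}"
    # Newer plugin versions prefer ssl_certificate_authorities over cacert.
    cacert   => "/etc/logstash/certs/http_ca.crt"
    index    => "weblogs-%{+YYYY.MM.dd}"
  }
}
EOF
}

# Usage: write_beats_pipeline && systemctl restart logstash
```

The default /etc/logstash/pipelines.yml loads every *.conf file under /etc/logstash/conf.d/, which is why the sketch writes there. The secret can be stored with the Logstash keystore (for example, `/usr/share/logstash/bin/logstash-keystore add ES_PASS`) instead of a plain environment variable.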

Kibana

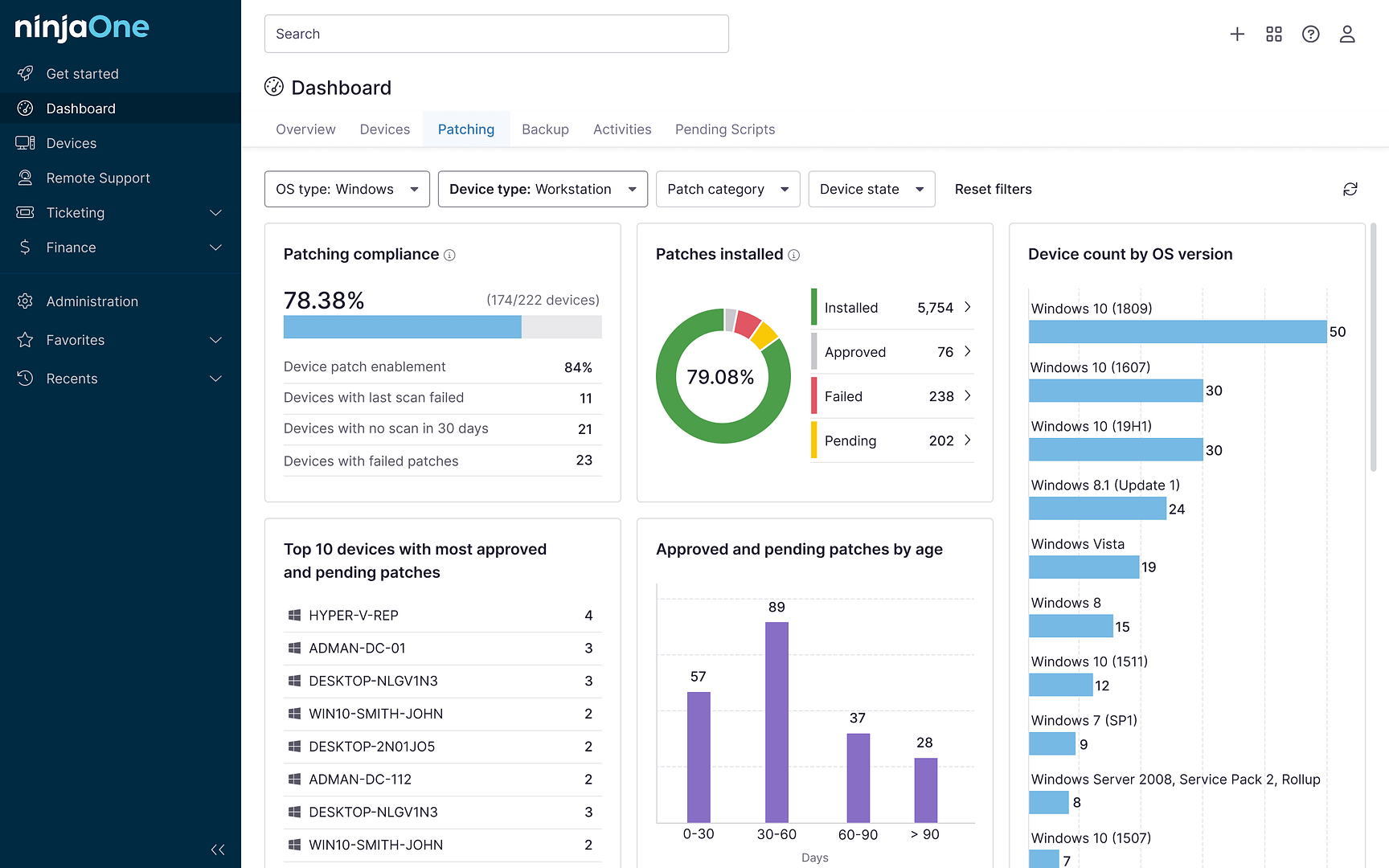

Kibana acts as the window into the ELK stack, providing a powerful platform for data visualization and exploration. It enables users to create various visual representations of data, such as dynamic, real-time dashboards and detailed charts and graphs for in-depth data analysis. Kibana is designed with user experience in mind, offering an intuitive interface that allows for easy navigation and extensive customization options.

ELK’s functionality and benefits

Log management and analysis

ELK excels in centralizing log storage and facilitating comprehensive log analysis. It supports near real-time log processing and efficient indexing, enabling quick data retrieval and analysis.

Data visualization and dashboards

Kibana is a powerful tool for creating interactive visualizations and dashboards. These visualizations help in extracting actionable insights from log data, making complex data sets understandable and useful.

Monitoring and analytics

ELK is highly effective for performance monitoring and system analytics. Its capabilities extend to detecting anomalies, aiding in troubleshooting issues, and optimizing overall IT infrastructure. Advanced applications of the ELK stack include predictive analytics and machine learning, demonstrating its versatility and adaptability to various use cases.

Installing ELK

One of ELK’s key strengths is its versatile and networked nature, allowing for a range of deployment configurations. It can be installed on a single machine, which is an excellent approach for smaller setups or initial testing environments. However, for more robust, distributed, or horizontally scaled networks, each component of the ELK stack can be deployed on separate servers. This scalability ensures that your ELK deployment can handle growing data loads and diverse operational demands effectively.

The steps below install ELK on an RPM-based Linux distribution that uses yum, such as RHEL, CentOS, Rocky Linux, or Fedora. Before you begin, consider your specific infrastructure needs, as the setup process can vary significantly depending on whether you’re aiming for a single-node installation or a more complex, distributed environment.

Step 1: Update your system

Update your system to the latest packages:

sudo yum update

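Step 2: Install Java (optional)

Elasticsearch and Logstash run on Java, but recent packages (including the 8.x packages used below) ship with a bundled JDK, so a separate installation is usually optional. Install OpenJDK if you want a system-wide Java runtime for other tools: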

sudo yum install java-latest-openjdk

After the installation, you can verify the Java version:

java -version

Step 3: Set up your repository

All the main ELK components are distributed through the same package repository, so if you install them on separate servers, repeat this step on each one.

- Import the Elasticsearch public GPG key into RPM:

sudo rpm --import https://artifacts.elastic.co/GPG-KEY-elasticsearch

- Create a new repository file for Elasticsearch:

sudo vim /etc/yum.repos.d/elastic.repo

- Add the following contents:

[elastic-8.x]
name=Elastic repository for 8.x packages
baseurl=https://artifacts.elastic.co/packages/8.x/yum
gpgcheck=1
gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch
enabled=1
autorefresh=1
type=rpm-md

- Update your local repository database:

sudo yum update
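When provisioning several servers, the interactive vim step can be replaced with a scripted one. The bash function below is a sketch that writes the same repository definition shown above; the function name is hypothetical, and the target path can be overridden as the first argument (useful for testing).

```shell
# Sketch: non-interactive version of the repository setup (run as root).
write_elastic_repo() {
  local repo_file="${1:-/etc/yum.repos.d/elastic.repo}"
  # Same contents as the manually edited elastic.repo file.
  cat > "$repo_file" <<'EOF'
[elastic-8.x]
name=Elastic repository for 8.x packages
baseurl=https://artifacts.elastic.co/packages/8.x/yum
gpgcheck=1
gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch
enabled=1
autorefresh=1
type=rpm-md
EOF
}

# Usage on each server (as root):
#   rpm --import https://artifacts.elastic.co/GPG-KEY-elasticsearch
#   write_elastic_repo
```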

Step 4: Install Elasticsearch, Logstash, and Kibana

- If you’re installing ELK on one system, run the following line. Should you need to install ELK on separate servers, simply omit whichever package names aren’t required:

sudo yum install elasticsearch kibana logstash

- Enable and start the Elasticsearch service:

sudo systemctl enable elasticsearch

sudo systemctl start elasticsearch

- Enable and start the Logstash service:

sudo systemctl enable logstash

sudo systemctl start logstash

- Enable and start the Kibana service:

sudo systemctl enable kibana

sudo systemctl start kibana
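The enable and start commands above can be combined per service with `systemctl enable --now`, followed by a quick status check. The bash function below is a sketch (its name is hypothetical); pass only the services installed on the current server, for example just kibana on a Kibana-only host.

```shell
# Sketch: enable, start, and check ELK services in one pass (run as root).
start_elk_services() {
  local svc failed=0
  for svc in "$@"; do
    # --now enables the unit at boot and starts it immediately.
    systemctl enable --now "$svc"
    if systemctl is-active --quiet "$svc"; then
      echo "$svc: active"
    else
      echo "$svc: NOT active (check: journalctl -u $svc)" >&2
      failed=1
    fi
  done
  # Non-zero if any service failed to come up.
  return "$failed"
}

# Usage on a single-node install: start_elk_services elasticsearch logstash kibana
```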

Step 5: Configure the firewall

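- If you have a firewall enabled, open the necessary ports. By default, Elasticsearch listens on port 9200 and Kibana on port 5601; open 9200 only if other hosts, such as a separate Logstash or Kibana server, need to reach Elasticsearch directly: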

sudo firewall-cmd --add-port=5601/tcp --permanent

sudo firewall-cmd --add-port=9200/tcp --permanent

sudo firewall-cmd --reload

- For Logstash, the ports that need to be opened depend on the input plugins you are using and how you have configured them. Logstash has no single default listening port, because each input plugin can be configured to listen on whatever port your pipeline needs. Use the following commands to open a port, replacing PORT_NUMBER with the port you configured:

sudo firewall-cmd --add-port=PORT_NUMBER/tcp --permanent

sudo firewall-cmd --reload

Here are a few common scenarios:

- Beats input: If you’re using Beats (like Filebeat or Metricbeat) to send data to Logstash, the default port for the Beats input plugin is 5044.

- HTTP input: If you’re using the HTTP input plugin, its default port is 8080. Avoid 9200 if Elasticsearch runs on the same host, because Elasticsearch already listens there.

- TCP/UDP input: For generic TCP or UDP inputs, you can configure Logstash to listen on any port that suits your configuration, such as 5000.

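- Syslog input: If you’re using Logstash to collect syslog messages, the standard syslog port is 514, commonly over UDP (open it with PORT_NUMBER/udp instead of /tcp). Ports below 1024 are privileged, so a Logstash service running as an unprivileged user may need to listen on a higher port instead.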
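Because the required ports differ from server to server, the firewall-cmd commands above can be wrapped in a small bash function. This is a sketch with a hypothetical function name; it validates every port before changing anything and only opens TCP ports, so a UDP syslog input still needs the /udp form.

```shell
# Sketch: permanently open one TCP port per argument, then reload firewalld.
# Run as root.
open_tcp_ports() {
  local port
  # Validate every argument first so a typo does not leave a half-applied change.
  for port in "$@"; do
    if ! [[ "$port" =~ ^[1-9][0-9]*$ ]] || (( port > 65535 )); then
      echo "invalid port: $port" >&2
      return 1
    fi
  done
  for port in "$@"; do
    firewall-cmd --permanent --add-port="${port}/tcp"
  done
  # --permanent rules only take effect after a reload.
  firewall-cmd --reload
}

# Usage for a single-node install that receives Beats traffic:
#   open_tcp_ports 5601 5044
```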

Step 6: Access Kibana

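After installation, you can access Kibana by navigating to http://your_server_ip:5601 from your web browser. By default, Kibana listens only on localhost, so to reach it from another machine, set server.host in /etc/kibana/kibana.yml (for example, to "0.0.0.0") and restart the kibana service. On first access, Kibana 8.x asks for an enrollment token, which you can generate with /usr/share/elasticsearch/bin/elasticsearch-create-enrollment-token -s kibana, followed by a verification code from /usr/share/kibana/bin/kibana-verification-code.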

Additional configuration

- Configure Elasticsearch, Kibana, and Logstash as needed. Their configuration files are located in /etc/elasticsearch/elasticsearch.yml, /etc/kibana/kibana.yml, and /etc/logstash/logstash.yml, respectively.

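- Secure your ELK stack with user authentication and other security measures. Elasticsearch 8.x enables authentication and TLS by default and, during package installation, prints a generated password for the built-in elastic user; save it, or reset it later with /usr/share/elasticsearch/bin/elasticsearch-reset-password -u elastic.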
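Kibana's listening address is controlled by the server.host setting in kibana.yml, which defaults to "localhost"; the stock file ships it as the commented line `#server.host: "localhost"`. The bash function below is a sketch (hypothetical name) that replaces that line, commented or not, or appends one if it is missing. It uses GNU sed's -i option, which is standard on RPM-based distributions.

```shell
# Sketch: set Kibana's server.host (run as root, then restart kibana).
set_kibana_host() {
  local host="$1" conf="${2:-/etc/kibana/kibana.yml}"
  if grep -qE '^#?server\.host:' "$conf"; then
    # Replace the existing (possibly commented-out) setting in place.
    sed -i -E "s|^#?server\.host:.*|server.host: \"${host}\"|" "$conf"
  else
    # No such line yet: append one.
    printf 'server.host: "%s"\n' "$host" >> "$conf"
  fi
}

# Usage: set_kibana_host 0.0.0.0 && systemctl restart kibana
```

Binding to 0.0.0.0 exposes Kibana on every interface, so pair it with the firewall rules above or use a specific interface address instead.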

Important notes

- Version numbers and repository links may change, so please refer to the official documentation for the most current information.

- Always ensure your system meets the hardware and software prerequisites for installing these components.

- It’s highly recommended to secure your ELK stack, especially if it’s exposed to the internet. This includes setting up authentication, encryption, and firewall rules.

ELK integration and use cases

The ELK stack’s integration capabilities with other tools and platforms significantly enhance its functionality and utility. Its use cases span many industries and include, among others:

- Advanced security and threat detection mechanisms.

- In-depth business intelligence and data analysis.

- Comprehensive application performance monitoring.

As the field of data analytics continues to evolve, so does the ELK stack. It adapts to new trends and developments, maintaining its relevance and effectiveness in the ever-changing landscape of IT infrastructure.

Elasticsearch, Logstash, and Kibana each bring unique and powerful capabilities to the ELK stack

ELK is indispensable for log management, analytics, and system monitoring. Its importance in the realm of IT cannot be overstated, with applications ranging from straightforward log aggregation to complex data analytics and predictive modeling.

Anyone can delve deeper into the ELK stack. A wealth of resources is available for those seeking to further their knowledge and skills, including comprehensive guides, active forums, and professional networks. The ELK stack represents not just a set of tools but a gateway to unlocking the vast potential of data in driving forward business and technological innovation.