Most development projects involve a wide range of environments. There are production, development, QA, and staging environments, plus each developer’s local environment. Keeping these environments in sync so your project runs the same (or runs at all) in each one can be quite a challenge.

There are many reasons for incompatibility, but using Docker will help you remove most of them. In this article, we look into Docker, application containers, and how using these tools can help you run your application anywhere you can install Docker, thus avoiding compatibility issues.

What is an application container?

An application container is a lightweight, stand-alone package that includes an application and all its dependencies, including libraries, runtime environments, and configuration files. A container holds everything the application requires to run in an isolated environment. Containers are designed to run on any compatible host: they abstract away differences between operating system (OS) distributions while sharing the host’s OS kernel. This allows multiple isolated applications or services to run on a single host without a full OS for each one.

Application containers differ from traditional virtualization in resource allocation, operating system, isolation, and scalability. Containers share resources like CPU, memory, and storage at the OS level, consuming fewer resources than virtual machines (VMs), which require a full OS for each instance. Because of this, containers typically start in seconds, whereas VMs can take minutes. The lower overhead also makes containers easier to scale than VMs. One place where VMs shine, however, is isolation: because each VM runs its own operating system, VMs are separated from one another much more strongly than containers are.

Why containerize applications?

Containerizing applications makes several challenges in software development, deployment, and operations easier to handle. Some of the top reasons to containerize an application include:

- Portability: Containerization provides a consistent runtime environment by packaging an application along with its dependencies and configuration. This ensures consistent behavior whether the container is running on a developer’s machine or on a cloud platform.

- Scalability: Containers allow for rapid deployment, patching, and scaling of applications. Container orchestrators can scale automatically, running just the right number of application instances to serve the current load while accounting for the resources available.

- Productivity: Containers provide a predictable, consistent environment no matter where they run. Instead of setting up each environment manually, developers can usually run a single command to start a container, and it will build and launch the environment for them.

- Flexibility: The efficiency of containers makes it easy to break an application up into smaller, deployable microservices without the resource overhead of running multiple physical servers or even VMs.

Introduction to Docker

Docker was launched in 2013 as an open-source platform that simplifies the process of building, running, managing, and distributing applications by using containerization. For many development teams, it is a fundamental tool in their software development and deployment workflows, allowing developers to package applications with their dependencies into lightweight, portable containers.

Let’s take a quick look at some key components of Docker to get an idea of how it works.

Dockerfile

A Dockerfile is a text file that contains the instructions for building a Docker image. It specifies which Docker image to use as a base, the location of the application source code that will be bundled with the image, and the libraries, packages, and other dependencies required to run the application. Docker reads this file and executes the instructions in it to build the Docker image.

Docker images

A Docker image is a read-only template that contains the application’s source code and dependencies and serves as the foundation for Docker containers. Docker images are created with a Dockerfile and can be versioned, stored in registries, and shared with others. Docker images use a layered architecture, where each layer represents a change to the image. Because the layering is tied directly to each command in a Dockerfile, every instruction can produce a new layer.
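To make the link between instructions and layers concrete, here is a minimal Python sketch that reads Dockerfile text and counts the instructions that add a filesystem layer. It assumes the common rule of thumb that RUN, COPY, and ADD create layers while other instructions mostly record metadata; the helper names and the sample Dockerfile are illustrative, and the parser ignores line continuations.

```python
# Rule of thumb (a simplification): these instructions add a filesystem
# layer; most others only record metadata in the image configuration.
LAYER_INSTRUCTIONS = {"RUN", "COPY", "ADD"}


def parse_instructions(dockerfile_text):
    """Return the instruction keyword of each non-empty, non-comment line."""
    keywords = []
    for line in dockerfile_text.splitlines():
        stripped = line.strip()
        if not stripped or stripped.startswith("#"):
            continue
        keywords.append(stripped.split(maxsplit=1)[0].upper())
    return keywords


def count_filesystem_layers(dockerfile_text):
    """Count the instructions that are expected to add a filesystem layer."""
    return sum(
        1 for keyword in parse_instructions(dockerfile_text)
        if keyword in LAYER_INSTRUCTIONS
    )


# Illustrative Dockerfile for a small Python web application.
EXAMPLE = """\
FROM python:3.10
COPY . /app
WORKDIR /app
RUN pip install -r requirements.txt
EXPOSE 5000
CMD ["python3", "app.py"]
"""

print(parse_instructions(EXAMPLE))
print(count_filesystem_layers(EXAMPLE))  # COPY and RUN -> 2
```

Under this simplified model, the sample file adds two layers on top of whatever layers the base image already contains.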

Docker containers

A Docker container is a running instance of a Docker image. Like other containers, Docker containers run in isolated environments on a host system, are lightweight, start quickly, and behave consistently regardless of which host system they run on.

How to containerize an application using Docker

Many types of applications can be containerized, including frontend and backend applications written in many different languages. For our examples, we’ll look at how a simple Python Flask web application can be containerized.

Prerequisites for Docker containerization

Because containerizing an application packages all of its code and dependencies in a container, there are few requirements aside from the following:

- You need to have Docker installed. Installation packages for Linux, Mac, and Windows are available from the official Docker documentation.

- Create a project folder to hold all the application’s files. In our example below, we will be using only three files.

- You may want to install the programming languages and libraries you’re using locally to test your application before you containerize it. However, this is not entirely necessary since you will be installing those in the application container, and Docker will be taking care of that part.

Understanding the application code and its dependencies

For our example, we’ll be creating a simple Python Flask app. Here is what the project folder will look like:

my_flask_app/

- app.py

- requirements.txt

Our application consists of two files: app.py, which holds the code for the web application, and requirements.txt, which is a file Python uses to install dependencies. Here are the contents of app.py with some comments explaining what each part does:

    # Import the Flask class from the flask module
    from flask import Flask

    # The Flask constructor uses the name of the current module
    app = Flask(__name__)

    # The route() function is a decorator;
    # it binds a URL to an associated function
    @app.route('/')
    def hello_world():
        return 'Hello World'

    # If the file is run directly, run the Flask application.
    # host='0.0.0.0' makes the server reachable from outside the container.
    if __name__ == '__main__':
        app.run(debug=True, host='0.0.0.0')

There is not much to the requirements.txt file. It simply lists Flask as a dependency:

Flask==2.3.2

A step-by-step guide to containerizing an application

Now that we have an application to containerize, the next step is creating a Dockerfile to hold the instructions for creating the Docker image.

Creating the Dockerfile

First, create an empty Dockerfile in the project. This file has no extension. Your project folder should look like this:

my_flask_app/

- app.py

- Dockerfile

- requirements.txt

Here are the contents of the Dockerfile:

    FROM python:3.10
    COPY . /app
    WORKDIR /app
    RUN pip install -r requirements.txt
    EXPOSE 5000
    CMD ["python3", "app.py"]

Here is what all of this means:

- FROM: This defines which image we will use as our base image. You can find a large selection of base images to start with at Docker Hub.

- COPY: When the image is built, the files from our project, represented by the dot, are copied to an /app folder inside the image.

- WORKDIR: This sets the working directory for the instructions that follow, here the /app folder we copied our project to. Both the RUN and CMD instructions below it execute from that folder.

- RUN: The RUN instruction tells Docker a command to run when building the image. This command runs during image creation, not when the container starts. Here we tell it to install the requirements in our requirements.txt file.

- EXPOSE: This documents the port the container listens on, which is the port our Flask application runs on. EXPOSE does not publish the port by itself; the port is mapped to the host when the container is run.

- CMD: This tells Docker the command to execute when the container starts, which here runs the Flask app. The CMD instruction provides a default for executing a container and can be overridden in the docker run command.
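The way CMD acts as an overridable default can be sketched in a few lines of Python. This is only a model of the behavior described above, not how Docker is implemented: the function names are hypothetical, only the exec (JSON array) form of CMD is handled, and the image is assumed to define no ENTRYPOINT.

```python
import json


def default_command(dockerfile_text):
    """Return the exec-form CMD of a Dockerfile as a list, or None if absent."""
    command = None
    for line in dockerfile_text.splitlines():
        stripped = line.strip()
        if stripped.upper().startswith("CMD "):
            # Exec form is a JSON array, e.g. CMD ["python3", "app.py"]
            command = json.loads(stripped[4:])
    return command  # if several CMD lines exist, the last one wins


def effective_command(dockerfile_text, run_args=None):
    """Arguments given after the image name replace the CMD default entirely."""
    if run_args:
        return list(run_args)
    return default_command(dockerfile_text)


DOCKERFILE = """\
FROM python:3.10
COPY . /app
WORKDIR /app
RUN pip install -r requirements.txt
EXPOSE 5000
CMD ["python3", "app.py"]
"""

print(effective_command(DOCKERFILE))                           # default CMD
print(effective_command(DOCKERFILE, ["python3", "--version"]))  # override
```

The second call models a command such as docker run flask-app python3 --version, where the arguments after the image name take the place of the default.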

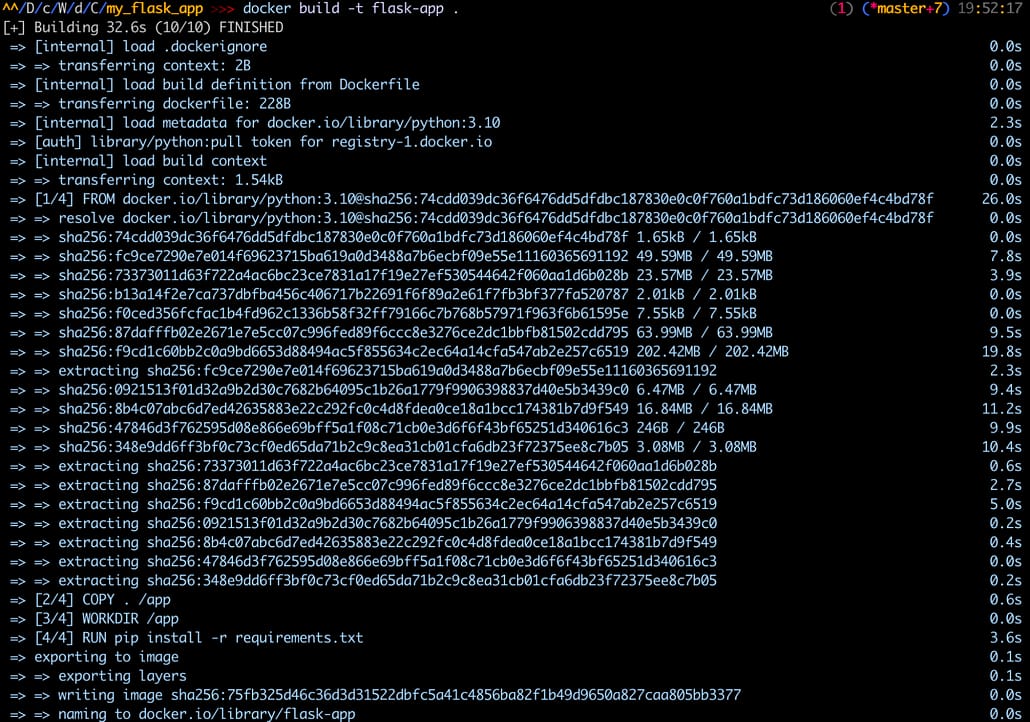

Building the Docker image

Now that we have created the Dockerfile, we can build the Docker image by running this command in our project directory:

docker build -t flask-app .

In the command, -t stands for tag and tags the image with a name, flask-app is the name that we will be giving the image, and the . defines the build context, which is the current directory.
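If you want to trigger the build from a script rather than typing the command, one possible approach is to assemble the same arguments in Python and hand them to subprocess. The function names below are hypothetical; only the docker build command and its -t flag come from the text above.

```python
import subprocess


def docker_build_command(tag, context="."):
    """Assemble the argument list for `docker build -t <tag> <context>`."""
    return ["docker", "build", "-t", tag, context]


def build_image(tag, context="."):
    """Run the build; raises CalledProcessError if docker exits with an error."""
    subprocess.run(docker_build_command(tag, context), check=True)


# Show the command that would be executed, without running it.
print(docker_build_command("flask-app"))
```

Calling build_image("flask-app") from the project directory would run the same build as the command shown earlier, provided Docker is installed and on the PATH.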

Once you run the command, Docker will build your image, and you will see an output like this:

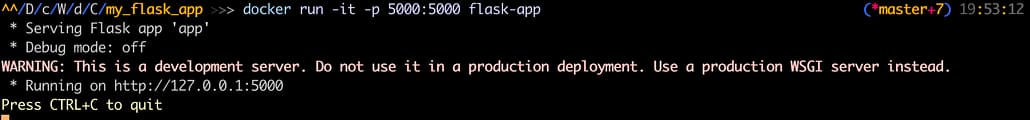

Running the Docker container

Now that the Docker image has been created, you can run the container with the following command in the project directory:

docker run -it -p 5000:5000 flask-app

In the command above, -it combines two flags: -i runs the container in interactive mode, keeping its standard input open, and -t allocates a tty, which gives you a text-based console. Flask is not a command-line tool that requires an interactive terminal, so -it is optional here. The -p flag maps a port on the host to a port in the container: the first 5000 is the host port, and the second 5000 is the container port. Finally, flask-app is the name of the image we just created and want to run.
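The order of the two port numbers is easy to mix up, so here is a small Python sketch that builds the docker run argument list from named parameters. The function and parameter names are hypothetical; the flags are the ones described above.

```python
def docker_run_command(image, host_port, container_port, interactive=False):
    """Assemble `docker run [-it] -p <host_port>:<container_port> <image>`."""
    command = ["docker", "run"]
    if interactive:
        command.append("-it")
    # The host port always comes first, the container port second.
    command += ["-p", f"{host_port}:{container_port}", image]
    return command


# Same mapping as in the article: host 5000 -> container 5000.
print(docker_run_command("flask-app", 5000, 5000, interactive=True))

# Serve the same container port on a different host port.
print(docker_run_command("flask-app", 8080, 5000))
```

With the second command, the application would still listen on port 5000 inside the container but would be reached from the host on port 8080.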

The output in the terminal after you run the command will look something like this:

And when you browse to http://localhost:5000/, you will see the very basic Flask application.
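Instead of opening a browser, you could also check the running container from a script. The following is a minimal sketch using only the Python standard library; wait_for_app is a hypothetical helper, and the retry count and delay are arbitrary choices.

```python
import time
import urllib.request


def wait_for_app(url, attempts=10, delay=0.5):
    """Poll url until it answers with HTTP 200; return the body text, or None."""
    for _ in range(attempts):
        try:
            with urllib.request.urlopen(url, timeout=2) as response:
                if response.status == 200:
                    return response.read().decode("utf-8")
        except OSError:
            # Covers refused connections, timeouts, and HTTP error responses.
            pass
        time.sleep(delay)
    return None
```

With the container running, wait_for_app("http://localhost:5000/") should return the page body; if nothing is listening on that port, it returns None after the last attempt.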

Best practices for Docker containerization

While containerizing an application with Docker offers many benefits for application development and deployment, it is important to follow best practices to take full advantage of them.

Here are some important rules to follow:

- Use a minimal base image: Choose the lightest base image that is feasible, like Alpine Linux, to reduce the size of your final image, lower resource consumption, and speed up builds.

- Use multi-stage builds: Build and compile in one stage, then copy only the required artifacts into the final stage to reduce the final image size.

- Minimize layers: Reduce the number of layers in your image by combining commands into a single RUN command because each layer adds overhead.

- Clean up unnecessary files: Remove temporary files, caches, and build artifacts after installing dependencies or building the application to reduce the image size.

- Run stateless containers: Store persistent data outside of the container in databases, object storage, or another external storage system so you can shut down the container without losing data.

- Tag your images: Tags help to manage the versions of your images. Using a unique tag for each deployment ensures that every node in a production cluster pulls exactly the same image version, even when you scale out to many nodes quickly.

- Patch your containers: Patching and updating your containers is a proactive way to improve security and reduce threats.
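As an illustration of the tagging advice, the sketch below derives a unique tag from a version number and a commit hash and checks it against Docker’s tag rules (at most 128 characters drawn from letters, digits, underscores, periods, and hyphens, and not starting with a period or hyphen). The naming scheme, the function names, and the sample commit hash are assumptions made for this example, not a Docker convention.

```python
import re

# Docker tag rule: up to 128 characters of letters, digits, '_', '.', '-',
# where the first character may not be '.' or '-'.
TAG_PATTERN = re.compile(r"[A-Za-z0-9_][A-Za-z0-9_.-]{0,127}")


def is_valid_tag(tag):
    """Return True if tag satisfies Docker's tag syntax."""
    return TAG_PATTERN.fullmatch(tag) is not None


def image_reference(name, version, commit):
    """Build '<name>:<version>-<short commit>' (hypothetical naming scheme)."""
    tag = f"{version}-{commit[:7]}"
    if not is_valid_tag(tag):
        raise ValueError(f"invalid Docker tag: {tag!r}")
    return f"{name}:{tag}"


print(image_reference("flask-app", "1.0.0", "3f2a9c1d8e7b"))
# prints flask-app:1.0.0-3f2a9c1
```

The resulting reference could be passed to docker build with -t in place of the plain flask-app name, so that each build gets its own identifiable image.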

Docker: An essential tool for modern development

Docker containerization has revolutionized application development. Containerized applications come with significant benefits, including portability, scalability, productivity, efficiency, and flexibility. Docker has made this process simple by providing a standardized and easy-to-use approach to containerization.

Embracing Docker, while making sure to follow best practices for containerization, can improve the development, deployment, and management of modern applications. By providing a consistent runtime environment, containers allow developers to build applications once and run them anywhere, simplifying the process across various environments. The scalability of containers enables rapid deployment and scaling, and their efficiency makes it practical to break applications into smaller, deployable microservices.

Docker, as a leading containerization platform, simplifies the process of building, running, managing, and distributing containerized applications, making it an essential tool for modern software development.